(Outdated)

Some old videos

Outdated (last updated in 2020): O-DU HIGH - NearRTRIC E2 setup: odu-high.mp4

Outdated (last updated in 2020): A1 flow: a1.mp4

Outdated (last updated in 2020): O1 flow: o1.mp4

A1 flow

This information is outdated (not updated since 2020):

Prerequisites: RIC and SMO Installation completed.

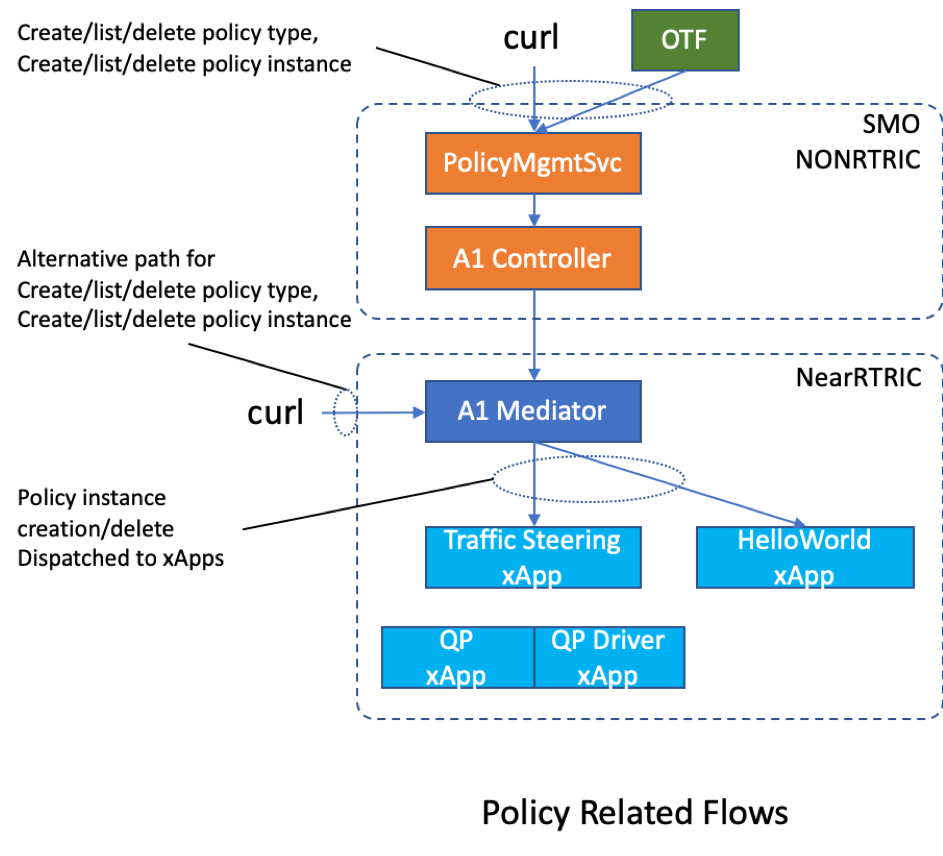

The SMO A1 (policy) flow is almost identical to the xApp (Traffic Steering) flow. The difference is that the policy operations (i.e. policy type creation and policy instance creation) come from the SMO instead of calling the A1 mediator API directly.

In the SMO cluster, the Near RT RIC's coordinates (IP, port, etc.) are specified in the recipe for the NonRTRIC. To check whether the SMO has successfully established communication with the Near RT RIC, run the following on the SMO cluster:

$ curl http://$(hostname):30093/rics
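The JSON returned by `/rics` lists each configured RIC together with a `state` field (a comment further down shows `"state":"UNAVAILABLE"` for a RIC the SMO could not reach). As a minimal sketch, assuming every entry carries `ricName` and `state` as plain string fields in that order, the helper below prints one `name state` pair per RIC. The function name `ric_states` is hypothetical, and the grep/sed parsing is only a stand-in for a real JSON parser such as jq.

```shell
# ric_states: read the JSON body of GET /rics on stdin and print
# "<ricName> <state>" for every RIC entry.
# Assumption: each entry has string fields "ricName" and "state", in that order.
ric_states() {
  grep -oE '"(ricName|state)"[[:space:]]*:[[:space:]]*"[^"]*"' |
    sed -E 's/^.*:[[:space:]]*"([^"]*)"$/\1/' |
    paste -d ' ' - -
}
```

It could be used as `curl -s "http://$(hostname):30093/rics" | ric_states`; any RIC whose state is not `AVAILABLE` is worth checking before attempting policy operations.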

To create a new policy instance under policy type 20008 (Traffic Steering's threshold policy), run:

$ POLICY_TYPE_ID="20008"

$ curl -v -i -X PUT --header "Content-Type: application/json" \
"http://$(hostname):30091/policy?id=FROMOTF&ric=ric1&service=dummyService&type=${POLICY_TYPE_ID}" \
--data "{\"threshold\" : 1}"
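The PUT request above packs several query parameters into a single URL, which makes typos easy to miss. A small sketch that assembles the URL from its parts, so that each value can be checked on its own, might look like this. The function name `policy_url` and its argument order are hypothetical; the parameter names `id`, `ric`, `service` and `type` are an assumption based on the command above (its last parameter is taken here to be the policy type), and the values are assumed to need no URL encoding.

```shell
# policy_url HOST PORT POLICY_ID RIC SERVICE TYPE_ID
# Prints the policy-instance URL used for the PUT request.
# Assumption: the service accepts the query parameters id, ric, service, type.
policy_url() {
  printf 'http://%s:%s/policy?id=%s&ric=%s&service=%s&type=%s\n' \
    "$1" "$2" "$3" "$4" "$5" "$6"
}
```

For example, `policy_url "$(hostname)" 30091 FROMOTF ric1 dummyService 20008` prints the URL that can then be passed to `curl -X PUT`.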

O1 flow

This information is outdated (not updated since 2020):

47 Comments

Gopalasingham Aravinthan

Hi,

Thanks a lot for the getting started guide.

I have successfully deployed the near RT RIC cluster and the policy instance creation works via near RT RIC.

But I have a few issues with the SMO cluster.

When I test the RIC connectivity via curl http://$(hostname):30093/rics (it did not work with port number 30091),

I receive the following output [{"ricName":"ric1","managedElementIds":["kista_1","kista_2"],"policyTypes":[],"state":"UNAVAILABLE"}

I think, in this case SMO did not establish a connection with nRT RIC, is it so?

Also I am not successful with creation of new policy instance via SMO cluster.

Also, I checked, and all the pods of the onap and nonrtric namespaces are deployed successfully in the cluster, as mentioned in the getting started guide.

Could you please advise me what the issue could be here?

Many Thanks

user-d3360

Hi Aravinthan,

Sorry, we are still in the process of updating the instructions. What's currently missing is how to configure the Non RT RIC to talk to the Near RT RIC. Before we add the steps to the instructions or the script, here is something for you to try now:

Non RT RIC:

Gopalasingham Aravinthan

Hi Lusheng,

Many Thanks for your quick response.

Perfect, it works well after following the above steps you provided.

Thanks again

Pavan Gupta

Hi Lusheng,

Are these steps still valid for the Cherry release? In my case, I have 2 VMs on AWS, with the SMO cluster on one and the RTRIC on the other. If I try 'wget http://node-ip-ric-cluster:32080/a1mediator', it doesn't work.

Also, I think a1mediator has changed for the Cherry release, meaning it can't be used in the URL. Can you please confirm this understanding?

Zhengwei Gao

hi all,

the instructions for a1 flow are somehow incomplete or even misleading.

for connecting nonrtric to ricplt, and for policy management using nonrtric-controlpanel, pls refer to my blog: https://blog.csdn.net/jeffyko/article/details/107975572

for policy management using a1-policy-agent(aka, policymanagementservice), and using a1-controller, pls refer to: https://blog.csdn.net/jeffyko/article/details/107991536

hope this can be helpful. gl.

Pavan Gupta

Hi Zhengwei,

The 2 links you have shared for nonrtric-ricplt and policy management using the a1 controller are for the Bronze release. I am using the Cherry release, and example_recipe.yaml for nonrtric seems to be a little different.

The baseurl is: "baseUrl":"https://a1-sim-osc-0.a1-sim:8185"

I am trying to figure out changes for Cherry, but in case you have ready info, kindly share the same.

Anonymous

Hey ,

have u figured out yet?

I have the same problem. If you have figured it out, could you tell me where the RIC's IP should be modified in the Cherry version?

Thanks.

Anonymous

I have same problem.

And there are no documents or guides for modifying the IP in the nonrtric recipe.

It's really upsetting.

Michael Duan

Hi,

could someone provide the detailed instructions for O1 flow?

Thanks

user-d3360

The demo scripts can be found under it/dep repo, under the demos directory.

user-d3360

Please post after you log in so people know who you are.

Michael Duan

Hi, all

I have installed Near-RT RIC, and tried to run odu-high.sh

After setting the RIC host and the odu host, and compiling the code, I got stuck in the process as shown below. Can anyone help to solve it?

(Do these two errors result from the code trying to connect to the O-CU and other components, as mentioned in the odu-high demo video?)

###############

...

Sending Scheduler config confirm to DU APP

DU_APP : Received SCH CFG CFM at DU APP

DU_APP : Configuring all Layer is complete

date: 08/27/2020 time: 07:42:58

mtss(posix): sw error: ent: 255 inst: 255 proc id: 000

file: /root/dep/demos/bronze/l2/src/cm/cm_inet.c line: 1877 errcode: 00007 errcls: ERRCLS_DEBUG

errval: 00000 errdesc: cmInetSctpBindx() Failed : error(99), port(38472), sockFd->fd(4)

SCTP: Failed during Binding in ODU

SCTP : ASSOC Req Failed.

DU_APP : Failed to process the request successfully

SCTP : Polling started at DU

DU_APP : Failed to send AssocReq F1

date: 08/27/2020 time: 07:42:58

mtss(posix): sw error: ent: 255 inst: 255 proc id: 000

file: /root/dep/demos/bronze/l2/src/cm/cm_inet.c line: 1877 errcode: 00007 errcls: ERRCLS_DEBUG

errval: 00000 errdesc: cmInetSctpBindx() Failed : error(99), port(32222), sockFd->fd(5)

SCTP: Failed during Binding in ODU

SCTP : ASSOC Req Failed.

DU_APP : Failed to process the request successfully

DU_APP : Failed to send AssocReq E2

DU_APP : Sending EGTP config request

EGTP : EGTP configuration successful

user-d3360

Michael,

It appears that the problem is the SCTP socket.

This version of the O-DU HIGH requires the IP addresses of both ends of the SCTP connection (i.e. O-DU HIGH and Near RT RIC) to be compiled into the binary. In other words, you need to modify two IP addresses in src/du_app/du_cfg.h: DU_IP_V4_ADDR, the O-DU's local IP address to which the SCTP socket binds, and RIC_IP_V4_ADDR, the peer IP address for the SCTP association. If you have not done so, make the modification based on your local setup's IP addresses, then recompile.

Now, in the cloud native world, identifying the right IP address can be tricky. For example, DU_IP_V4_ADDR is the NIC address of the host running the O-DU code (assuming you are running the binary on the host). However, RIC_IP_V4_ADDR should be the external IP address of the k8s cluster running the Near RT RIC.
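Error 99 in the log above is Linux's EADDRNOTAVAIL ("Cannot assign requested address"), which is what a bind to an address that no local interface owns produces. A minimal sketch for checking a candidate DU_IP_V4_ADDR against the host's addresses before recompiling, assuming the iproute2 `ip` tool is available; the function name `is_local_ip` is hypothetical.

```shell
# is_local_ip ADDR
# Reads the output of `ip -4 -o addr show` on stdin and succeeds (exit 0)
# only if ADDR is assigned to one of the listed interfaces.
is_local_ip() {
  awk -v ip="$1" '
    { split($4, cidr, "/"); if (cidr[1] == ip) found = 1 }
    END { exit !found }
  '
}
```

For example, `ip -4 -o addr show | is_local_ip 192.168.1.10 && echo "address is local"`, where the address is a placeholder for the value intended for DU_IP_V4_ADDR.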

Hope this helps.

Lusheng

Anonymous

Hi, Lusheng

Thank you for your help, it works for me

BhanuPrakash Ramisetti

Hi All,

I have established an SCTP connection between the RIC (E2 termination) and O-DU High, and I am able to send an E2 setup request from O-DU High to the RIC (E2 termination) and get a response back. Now, how can I communicate from O-DU High (sending data) to a deployed xApp (say, the TS xApp) through the E2 termination, and vice versa?

Pavan Gupta

Hi,

If anyone has tried A1 flow from nonRT-RIC (SMO) to RT-RIC on Cherry or bronze release using the following command, please share if the following command worked for you. Also, if you had to make any changes to it to make it run, kindly share the same.

Cedric Morin

Hi,

We tried the A1 demo with both Bronze and Cherry and it works well.

We faced some issues with the correct values to enter for each field (port number ...) so I suggest you check all the values used (see below).

Also, our command is slightly different; I don't know if this changes anything.

Here is what we have done :

Regards,

Cedric

Pavan Gupta

Hi Cedric,

Many thanks for your response. Can you please also share how you have configured the near RT RIC params in the SMO? Have you made any changes to the example_recipe.yaml file? If so, can you please share those changes?

I am suspecting that my SMO and rtric that run on AWS are unable to talk to each other. Private IP address of each is reachable from the other. Policymanagement service should be able to talk to kong-proxy running on port 32080 on rtric cluster. Please let me know if my understanding is correct.

Regards,

Pavan

Cedric Morin

Hi,

We did not use AWS, we used physical machines, so I cannot help you with AWS related problems. However indeed the two should be able to talk using the near RT RIC kong port.

Regarding example_recipe.yaml modification, we took one of the "fake" RICs that were listed in the file, and we just changed its "baseUrl" to : "http://10.51.0.20:32080/a1mediator" (http and not https).

Also be careful that the name you give the RIC here is consistent with the RIC_NAME in my previous answer.

kubectl get service -n ricplt r4-infrastructure-kong-proxy -o json | jq '.spec.ports[] | select (.name == "kong-proxy") | .nodePort'

In the end from your SMO you should be able to see your RIC : curl http://10.51.0.22:30093/rics | jq .

Note : once I got an issue because the Kong port was not open. You can check this : ss -tulw | grep 32080
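Since the `baseUrl` scheme matters here (http and not https), a quick scan of the recipe for RIC entries that still point at `https://` can save a redeploy cycle. This is a rough sketch, assuming each `baseUrl` entry is written on a single line in the `"baseUrl":"..."` form used in the example recipe; the function name `https_base_urls` is hypothetical.

```shell
# https_base_urls: read a recipe on stdin and print every "baseUrl" value
# that uses the https scheme (one URL per line, nothing if there are none).
https_base_urls() {
  grep -oE '"baseUrl"[[:space:]]*:[[:space:]]*"https://[^"]*"' |
    sed -E 's/^.*"(https:[^"]*)"$/\1/'
}
```

For example, `https_base_urls < dep/nonrtric/RECIPE_EXAMPLE/example_recipe.yaml` lists the entries that would need changing for a real Near RT RIC reached through Kong.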

Best regards,

Cedric

(to answer to your other question yes we see the A1 flow)

ak47885395@yahoo.com.tw

Hi Cedric:

Could you tell me how you opened your Kong port? The state of my Kong port is "filtered".

Cedric Morin

Hi,

I think I just rebooted my computer. As far as I remember, the port was just busy due to the different attempts I made. You may try this, but "filtered" seems to be a more problematic issue, related to configuration problems. I do not know how to solve it.

ak47885395@yahoo.com.tw

Hi,

Thank you for the prompt reply.

I just changed the baseUrl from HTTPS to HTTP. With the Kong port still filtered, the Policy Control of my Control Panel can interact with my a1mediator, so I think the "filtered" state isn't the actual reason for the failed connection between the non-RT RIC and the near-RT RIC.

However, I am wondering how to test if near-rt ric can transmit some data to the EI Service in non-rt RIC? Could you give me some suggestions for that?

I will be grateful for any help you can provide.

Cedric Morin

Indeed as I said in my previous answer it doesn't work with HTTPS, it took us some time to understand this.

I can't help you with the EI service, we haven't used it so far. Regarding SMO-RIC communication I only focused on the areas covered by the demos : discovery, policy information exchange, and monitoring.

(just in case you didn't find them, the demos scripts are under [cherry_code]/dep/demos/bronze)

Cedric Morin

Hi,

Has anyone tried the alarm demo with the Cherry release?

We successfully tried this demo using the bronze version, but with the Cherry version we couldn't find ONAP SDNC component in the SMO anymore. We however managed to run all the other demos related to SMO, nearRT RIC, and connection between the two, using the cherry release.

In order to install the cherry version of the SMO we first installed the ricaux components (which come with some ONAP pods), then the nonrtric, using the script available at oran_cherry/dep/bin and the corresponding recipes available at oran_cherry/receipe_example.

In order to get the alarm demo working :

Thank you for your responses,

Best regards,

Cedric

Pavan Gupta

Hi Cedric,

I could install SDNC, please check the output below:

root@ip-172-31-35-21:~# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5d4dd4b4db-55phw 1/1 Running 5 6d7h

kube-system coredns-5d4dd4b4db-m44j7 1/1 Running 5 6d7h

kube-system etcd-ip-172-31-35-21 1/1 Running 5 6d7h

kube-system kube-apiserver-ip-172-31-35-21 1/1 Running 5 6d7h

kube-system kube-controller-manager-ip-172-31-35-21 1/1 Running 5 6d7h

kube-system kube-flannel-ds-g5c8f 1/1 Running 5 6d7h

kube-system kube-proxy-cwkpb 1/1 Running 5 6d7h

kube-system kube-scheduler-ip-172-31-35-21 1/1 Running 5 6d7h

kube-system tiller-deploy-7c54c6988b-9gv7t 1/1 Running 5 6d7h

nonrtric a1-sim-osc-0 1/1 Running 4 6d5h

nonrtric a1-sim-osc-1 1/1 Running 4 6d5h

nonrtric a1-sim-std-0 1/1 Running 4 6d5h

nonrtric a1-sim-std-1 1/1 Running 4 6d5h

nonrtric a1controller-64c4f59fb5-kdk6n 0/1 Running 4 6d5h

nonrtric controlpanel-5ff4849bf-bvzjk 1/1 Running 4 6d5h

nonrtric db-549ff9b4d5-7hznj 1/1 Running 4 6d5h

nonrtric enrichmentservice-8578855744-9t5qm 1/1 Running 5 6d5h

nonrtric policymanagementservice-77c59f6cfb-jwjzs 1/1 Running 4 6d5h

nonrtric rappcatalogueservice-5945c4d84b-snppk 1/1 Running 4 6d5h

onap dev-consul-68d576d55c-xckf8 1/1 Running 4 6d5h

onap dev-consul-server-0 1/1 Running 4 6d5h

onap dev-consul-server-1 1/1 Running 4 6d5h

onap dev-consul-server-2 1/1 Running 4 6d5h

onap dev-kube2msb-9fc58c48-cfl6k 0/1 Error 8 6d5h

onap dev-mariadb-galera-0 0/1 Running 668 6d5h

onap dev-mariadb-galera-1 0/1 Running 669 6d5h

onap dev-mariadb-galera-2 0/1 Running 666 6d5h

onap dev-message-router-0 0/1 Completed 15 6d5h

onap dev-message-router-kafka-0 0/1 Init:1/4 1 6d5h

onap dev-message-router-kafka-1 0/1 Running 10 6d5h

onap dev-message-router-kafka-2 0/1 Init:1/4 1 6d5h

onap dev-message-router-zookeeper-0 0/1 Running 4 6d5h

onap dev-message-router-zookeeper-1 0/1 Running 4 6d5h

onap dev-message-router-zookeeper-2 0/1 Running 4 6d5h

onap dev-msb-consul-65b9697c8b-6zpr5 1/1 Running 4 6d5h

onap dev-msb-discovery-54b76c4898-tsv8t 2/2 Running 8 6d5h

onap dev-msb-eag-76d4b9b9d7-crbzf 2/2 Running 8 6d5h

onap dev-msb-iag-65c59cb86b-nt66m 2/2 Running 8 6d5h

onap dev-sdnc-0 1/2 Running 8 6d5h

onap dev-sdnc-db-0 0/1 Running 4 6d5h

onap dev-sdnc-dmaap-listener-5c77848759-zq4mp 0/1 Init:1/2 0 6d5h

onap dev-sdnc-sdnrdb-init-job-lztrp 0/1 Completed 0 6d5h

onap dev-sdnrdb-coordinating-only-9b9956fc-59gcd 2/2 Running 8 6d5h

onap dev-sdnrdb-master-0 1/1 Running 4 6d5h

onap dev-sdnrdb-master-1 1/1 Running 4 6d5h

onap dev-sdnrdb-master-2 1/1 Running 4 6d5h
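In a long listing like this one, the pods that are not fully ready are easy to miss. A small sketch that filters the `kubectl get pods --all-namespaces` table down to pods whose READY count is incomplete, skipping finished jobs (STATUS `Completed`). The function name `not_ready_pods` is hypothetical, and the column layout is assumed to match the output above.

```shell
# not_ready_pods: read `kubectl get pods --all-namespaces` output on stdin
# and print "<namespace>/<name> <ready> <status>" for each pod whose READY
# column is not n/n. Pods in status Completed are skipped.
not_ready_pods() {
  awk 'NR > 1 && $4 != "Completed" {
    split($3, ready, "/")
    if (ready[1] != ready[2]) print $1 "/" $2, $3, $4
  }'
}
```

It could be run as `kubectl get pods --all-namespaces | not_ready_pods`; an empty result means every listed pod is either fully ready or a completed job.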

Pavan Gupta

Did you run the install script under the ~/dep/tools/onap directory? In the Cherry release, onap is installed separately.

Cedric Morin

Hi,

thank you for your response.

Yes, we ran the script you mention, but it systematically caused problems for other components. For example, in your output, is "onap dev-sdnc-dmaap-listener-5c77848759-zq4mp 0/1 Init:1/2 0 6d5h" the expected behaviour? Shouldn't it be Running, with 1/1 ready?

Pavan Gupta

Ideally it should be running 1/1. Have you tried a totally fresh installation, i.e. clean up everything and then run again? It has helped me in the past. I create the cluster in a VM.

Cedric Morin

Hi,

Thank you for your answer. We actually managed to properly install the SMO cherry using a later very useful comment in the SMO installation page.

The alarm demo still doesn't work for us, however, but this time it is due to the RIC cherry: the alarm-related pod doesn't seem to exist anymore. It seems to be a known problem.

Daniel Camps Mur

Dear all,

I am having a problem trying to connect SMO and nrtRIC VMs for the Bronze release. I deployed SMO and RIC following instructions in VMs I have in a private cloud, and now I am trying to redeploy nrt-ric in SMO to be able to connect to the VM where the RIC is deployed.

These are the steps I am following:

1) In SMO: run ./__config-ip.sh in "dep/demos/bronze" to reconfigure recipes with the correct IP of the RIC

2) The recipe that is reconfigured is the one living in: "dep/nonrtric/RECIPE_EXAMPLE/example_recipe.yaml"

3) After updating the recipe, I redeploy non-rtric doing:

dep/smo/bin/smo-deploy/smo-dep/bin# sudo ./undeploy-nonrtric && sudo ./deploy-nonrtric -f /root/dep/nonrtric/RECIPE_EXAMPLE/example_recipe.yaml

4) After redeploying I see one service (a1-sim-std2-0) in the nonrtric namespace not transitioning to Running state:

nonrtric a1-sim-osc-0 1/1 Running 0 18m

nonrtric a1-sim-osc-1 1/1 Running 0 18m

nonrtric a1-sim-std-0 1/1 Running 0 18m

nonrtric a1-sim-std-1 1/1 Running 0 18m

nonrtric a1-sim-std2-0 0/1 CrashLoopBackOff 8 18m

nonrtric a1controller-64c4f59fb5-m78sm 1/1 Running 0 18m

nonrtric controlpanel-59d48b98b-h64qf 1/1 Running 0 18m

nonrtric db-549ff9b4d5-hvpkr 1/1 Running 0 18m

nonrtric enrichmentservice-8578855744-sg7vg 1/1 Running 0 18m

nonrtric nonrtricgateway-6478f59b66-tctd5 1/1 Running 0 18m

nonrtric policymanagementservice-64d4f4db9b-zmfhj 1/1 Running 0 18m

nonrtric rappcatalogueservice-5945c4d84b-m8sc4 1/1 Running 0 18m

5) I try to verify connected RICs doing: curl -s http://$(hostname):30093/rics | jq . . But I cannot see the configured RIC. This is what I get:

curl -s http://$(hostname):30093/rics | jq .

{

"timestamp": "2021-02-16T14:56:30.317+00:00",

"path": "/rics",

"status": 404,

"error": "Not Found",

"message": null,

"requestId": "682c56e7-3"

}

6) I verify that port 32080 is open in the remote RIC

nmap 192.168.115.217 -p 32080

Starting Nmap 7.60 ( https://nmap.org ) at 2021-02-16 14:57 UTC

Nmap scan report for 192.168.115.217

Host is up (0.00074s latency).

PORT STATE SERVICE

32080/tcp open unknown

MAC Address: FA:16:3E:A5:08:A9 (Unknown)

Nmap done: 1 IP address (1 host up) scanned in 0.67 seconds

7) I verify that 32080 is indeed the kong port in the RIC server:

kubectl get service -n ricplt r4-infrastructure-kong-proxy -o json | jq '.spec.ports[] | select (.name == "kong-proxy") | .nodePort'

32080

Any idea about what I am missing is appreciated.

BR

Daniel

Daniel Camps Mur

Hi all,

Some additional information.

I am monitoring the logs of the a1mediator service in the RIC VM while re-deploying the nonrtRIC in the SMO VM, but I see no indication that the NONRTRIC is trying to connect. This makes me think that there is something wrong with the recipe I use. I paste below the nonrtric deployment recipe and the logs observed in the a1mediator service.

The IP address of the RIC VM in the recipe is 192.168.115.217. The NONRTRIC is deployed using the following scripts:

sudo ./undeploy-nonrtric && sudo ./deploy-nonrtric -f /root/dep/nonrtric/RECIPE_EXAMPLE/example_recipe.yaml

BR

Daniel

***** Recipe used to deploy nonrtric:START (space indentation not preserved)******

#-------------------------------------------------------------------------

# Global common setting

#-------------------------------------------------------------------------

common:

releasePrefix: r2-dev-nonrtric

# Change the namespaces using the following options

namespace:

nonrtric: nonrtric

component: nonrtric

# A1 Conttroller may take few more minutes to start. Increase the initialDelaySeconds in liveness to avoid container restart.

a1controller:

a1controller:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10002/o-ran-sc'

name: nonrtric-a1-controller

tag: 2.0.1

replicaCount: 1

service:

allowHttp: true

httpName: http

internalPort1: 8282

targetPort1: 8181

httpsName: https

internalPort2: 8383

targetPort2: 8443

liveness:

initialDelaySeconds: 300

periodSeconds: 10

readiness:

initialDelaySeconds: 60

periodSeconds: 10

a1simulator:

a1simulator:

name: a1-sim

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10002/o-ran-sc'

name: a1-simulator

tag: 2.1.0

service:

allowHttp: true

httpName: http

internalPort1: 8085

targetPort1: 8085

httpsName: https

internalPort2: 8185

targetPort2: 8185

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

oscVersion:

name: a1-sim-osc

replicaCount: 2

stdVersion:

name: a1-sim-std

replicaCount: 2

stdVersion2:

name: a1-sim-std2

replicaCount: 2

controlpanel:

controlpanel:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10004/o-ran-sc'

name: nonrtric-controlpanel

tag: 2.2.0

replicaCount: 1

service:

allowHttp: true

httpName: http

internalPort1: 8080

targetPort1: 8080

externalPort1: 30091

httpsName: https

internalPort2: 8081

targetPort2: 8082

externalPort2: 30092

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

# Need to check the external port Availability

policymanagementservice:

policymanagementservice:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10004/o-ran-sc'

name: nonrtric-policy-agent

tag: 2.2.0

service:

allowHttp: true

httpName: http

internalPort1: 9080

targetPort1: 8081

httpsName: https

internalPort2: 9081

targetPort2: 8433

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

ric: |

[

{

"name":"ric1",

"baseUrl":"http://192.168.115.217:32080/a1mediator",

"controller": "controller1",

"managedElementIds":

[

"kista_1",

"kista_2"

]

}

]

streams_publishes: |

{

"dmaap_publisher": {

"type":"message_router",

"dmaap_info":{

"topic_url":"http://message-router.onap:3904/events/A1-POLICY-AGENT-WRITE"

}

}

}

streams_subscribes: |

{

"dmaap_subscriber":{

"type":"message_router",

"dmaap_info":{

"topic_url":"http://message-router.onap:3904/events/A1-POLICY-AGENT-READ/users/policy-agent?timeout=15000&limit=100"

}

}

}

enrichmentservice:

enrichmentservice:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10002/o-ran-sc'

name: 'nonrtric-enrichment-coordinator-service'

tag: 1.0.0

service:

allowHttp: true

httpName: http

internalPort1: 9082

targetPort1: 8083

httpsName: https

internalPort2: 9083

targetPort2: 8434

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

persistence:

enabled: true

volumeReclaimPolicy: Retain

accessMode: ReadWriteOnce

size: 2Gi

mountPath: /dockerdata-nfs

mountSubPath: nonrtric/enrichmentservice

rappcatalogueservice:

rappcatalogueservice:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10002/o-ran-sc'

name: nonrtric-r-app-catalogue

tag: 1.0.0

service:

allowHttp: true

httpName: http

internalPort1: 9085

targetPort1: 8080

httpsName: https

internalPort2: 9086

targetPort2: 8433

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

nonrtricgateway:

nonrtricgateway:

imagePullPolicy: IfNotPresent

image:

registry: 'nexus3.o-ran-sc.org:10004/o-ran-sc'

name: nonrtric-gateway

tag: 0.0.1

service:

httpName: http

internalPort1: 9090

targetPort1: 9090

externalPort1: 30093

liveness:

initialDelaySeconds: 20

periodSeconds: 10

readiness:

initialDelaySeconds: 20

periodSeconds: 10

***** Recipe used to deploy nonrtric:END******

***** RIC a1mediator logs while redeploying nonrtRIC: START ******

::ffff:10.244.0.1 - - [2021-02-17 14:56:46] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002205

::ffff:10.244.0.1 - - [2021-02-17 14:56:55] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002354

::ffff:10.244.0.1 - - [2021-02-17 14:56:56] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002225

::ffff:10.244.0.1 - - [2021-02-17 14:57:05] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002922

::ffff:10.244.0.1 - - [2021-02-17 14:57:06] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002342

::ffff:10.244.0.1 - - [2021-02-17 14:57:15] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002730

::ffff:10.244.0.1 - - [2021-02-17 14:57:16] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002335

1613573845 1/RMR [INFO] sends: ts=1613573845 src=service-ricplt-a1mediator-rmr.ricplt:4562 target=service-ricxapp-admctrl-rmr.ricxapp:4563 open=0 succ=0 fail=0 (hard=0 soft=0)

1613573845 1/RMR [INFO] sends: ts=1613573845 src=service-ricplt-a1mediator-rmr.ricplt:4562 target=service-ricplt-submgr-rmr.ricplt:4560 open=0 succ=0 fail=0 (hard=0 soft=0)

1613573845 1/RMR [INFO] sends: ts=1613573845 src=service-ricplt-a1mediator-rmr.ricplt:4562 target=service-ricplt-e2mgr-rmr.ricplt:3801 open=0 succ=0 fail=0 (hard=0 soft=0)

::ffff:10.244.0.1 - - [2021-02-17 14:57:25] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002635

::ffff:10.244.0.1 - - [2021-02-17 14:57:26] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.004832

::ffff:10.244.0.1 - - [2021-02-17 14:57:35] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002423

::ffff:10.244.0.1 - - [2021-02-17 14:57:36] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002182

::ffff:10.244.0.1 - - [2021-02-17 14:57:45] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002494

::ffff:10.244.0.1 - - [2021-02-17 14:57:46] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002327

::ffff:10.244.0.1 - - [2021-02-17 14:57:55] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002973

::ffff:10.244.0.1 - - [2021-02-17 14:57:56] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002124

::ffff:10.244.0.1 - - [2021-02-17 14:58:05] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002292

::ffff:10.244.0.1 - - [2021-02-17 14:58:06] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.001835

::ffff:10.244.0.1 - - [2021-02-17 14:58:15] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.001771

::ffff:10.244.0.1 - - [2021-02-17 14:58:16] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.001660

::ffff:10.244.0.1 - - [2021-02-17 14:58:25] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002843

::ffff:10.244.0.1 - - [2021-02-17 14:58:26] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.001842

::ffff:10.244.0.1 - - [2021-02-17 14:58:35] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002164

::ffff:10.244.0.1 - - [2021-02-17 14:58:36] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.001960

::ffff:10.244.0.1 - - [2021-02-17 14:58:45] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.003129

::ffff:10.244.0.1 - - [2021-02-17 14:58:46] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.002876

::ffff:10.244.0.1 - - [2021-02-17 14:58:55] "GET /a1-p/healthcheck HTTP/1.1" 200 110 0.003377

***** RIC a1mediator logs while redeploying nonrtRIC: END ******
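The log excerpt above consists almost entirely of `/a1-p/healthcheck` probes, which makes it hard to see whether any real A1 request arrived. A minimal sketch that keeps only HTTP access-log lines other than health checks, assuming the access-log format shown above; the function name `a1_requests` is hypothetical.

```shell
# a1_requests: read a1mediator logs on stdin and print only HTTP access-log
# lines that are not health-check probes. Empty output means no other
# HTTP request was logged.
a1_requests() {
  grep -E '"(GET|PUT|POST|DELETE) [^"]* HTTP/' | grep -v '/a1-p/healthcheck' || true
}
```

Piping the a1mediator pod's `kubectl logs` output through `a1_requests` while redeploying the nonrtric would show at a glance whether any request other than the probes reached the mediator.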

Daniel Camps Mur

Just one more note. These are the services configured in the nonrtric and ricplt clusters:

********* NONRTRIC (SMO VM)

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

a1-sim ClusterIP None <none> 8085/TCP,8185/TCP 30m

a1controller ClusterIP 10.98.181.196 <none> 8282/TCP,8383/TCP 30m

controlpanel NodePort 10.107.216.213 <none> 8080:30091/TCP,8081:30092/TCP 30m

dbhost ClusterIP 10.103.168.176 <none> 3306/TCP 30m

enrichmentservice ClusterIP 10.103.28.246 <none> 9082/TCP,9083/TCP 30m

nonrtricgateway NodePort 10.97.154.116 <none> 9090:30093/TCP 30m

policymanagementservice ClusterIP 10.110.226.143 <none> 9080/TCP,9081/TCP 30m

rappcatalogueservice ClusterIP 10.111.36.207 <none> 9085/TCP,9086/TCP 30m

sdnctldb01 ClusterIP 10.107.50.176 <none> 3306/TCP 30m

****** RICPLT (RIC VM)

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aux-entry ClusterIP 10.97.240.110 <none> 80/TCP,443/TCP 45h

r4-infrastructure-kong-proxy NodePort 10.108.152.115 <none> 32080:32080/TCP,32443:32443/TCP 45h

r4-infrastructure-prometheus-alertmanager ClusterIP 10.100.110.77 <none> 80/TCP 45h

r4-infrastructure-prometheus-server ClusterIP 10.103.46.114 <none> 80/TCP 45h

service-ricplt-a1mediator-http ClusterIP 10.107.190.106 <none> 10000/TCP 45h

service-ricplt-a1mediator-rmr ClusterIP 10.110.46.20 <none> 4561/TCP,4562/TCP 45h

service-ricplt-alarmadapter-http ClusterIP 10.96.157.84 <none> 8080/TCP 45h

service-ricplt-alarmadapter-rmr ClusterIP 10.98.164.11 <none> 4560/TCP,4561/TCP 45h

service-ricplt-appmgr-http ClusterIP 10.104.254.185 <none> 8080/TCP 45h

service-ricplt-appmgr-rmr ClusterIP 10.98.44.172 <none> 4561/TCP,4560/TCP 45h

service-ricplt-dbaas-tcp ClusterIP None <none> 6379/TCP 45h

service-ricplt-e2mgr-http ClusterIP 10.102.62.179 <none> 3800/TCP 45h

service-ricplt-e2mgr-rmr ClusterIP 10.110.83.210 <none> 4561/TCP,3801/TCP 45h

service-ricplt-e2term-rmr-alpha ClusterIP 10.100.0.185 <none> 4561/TCP,38000/TCP 45h

service-ricplt-e2term-sctp-alpha NodePort 10.105.109.56 <none> 36422:32222/SCTP 45h

service-ricplt-jaegeradapter-agent ClusterIP 10.106.239.65 <none> 5775/UDP,6831/UDP,6832/UDP 45h

service-ricplt-jaegeradapter-collector ClusterIP 10.99.188.31 <none> 14267/TCP,14268/TCP,9411/TCP 45h

service-ricplt-jaegeradapter-query ClusterIP 10.102.252.67 <none> 16686/TCP 45h

service-ricplt-o1mediator-http ClusterIP 10.105.42.78 <none> 9001/TCP,8080/TCP,3000/TCP 45h

service-ricplt-o1mediator-tcp-netconf NodePort 10.105.244.59 <none> 830:30830/TCP 45h

service-ricplt-rtmgr-http ClusterIP 10.105.216.9 <none> 3800/TCP 45h

service-ricplt-rtmgr-rmr ClusterIP 10.99.18.241 <none> 4561/TCP,4560/TCP 45h

service-ricplt-submgr-http ClusterIP None <none> 3800/TCP 45h

service-ricplt-submgr-rmr ClusterIP None <none> 4560/TCP,4561/TCP 45h

service-ricplt-vespamgr-http ClusterIP 10.109.203.38 <none> 8080/TCP,9095/TCP 45h

service-ricplt-xapp-onboarder-http ClusterIP 10.100.168.225 <none> 8888/TCP,8080/TCP 45h

Pavan Gupta

Hi,

If you have found a solution for this, kindly share it. We experienced the same issue too, but couldn't resolve it.

LUKAI

When will the O1 flow be added?

Daniel Camps Mur

Hi all,

I encountered a couple of problems when validating the A1 workflow with the Bronze release that I would like to share with you.

1) There is an error when onboarding the QPD xApp:

{

"error_source": "config-file.json",

"error_message": "'__empty_control_section__' is a required property",

"status": "Input payload validation failed"

}

2) There is an error when pulling the Docker image for the container that fills the database with RAN data for the TS xApp:

===> Inject DBaaS (RNIB) with testing data for Traffic Steering test

Cloning into 'ts'...

warning: redirecting to https://gerrit.o-ran-sc.org/r/ric-app/ts/

remote: Counting objects: 6, done

remote: Total 297 (delta 0), reused 297 (delta 0)

Receiving objects: 100% (297/297), 131.94 KiB | 229.00 KiB/s, done.

Resolving deltas: 100% (141/141), done.

/tmp/tsflow-202104120928 /tmp/tsflow-202104120928

Error: release: "dbprepop" not found

Sending build context to Docker daemon 19.97kB

Step 1/17 : FROM nexus3.o-ran-sc.org:10004/o-ran-sc/bldr-ubuntu18-c-go:8-u18.04 as buildenv

manifest for nexus3.o-ran-sc.org:10004/o-ran-sc/bldr-ubuntu18-c-go:8-u18.04 not found: manifest unknown: manifest unknown

NAME: dbprepop

LAST DEPLOYED: Mon Apr 12 09:31:24 2021

NAMESPACE: ricplt

STATUS: DEPLOYED

RESOURCES:

==> v1/Job

NAME COMPLETIONS DURATION AGE

dbprepopjob 0/1 1s 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

dbprepopjob-4xwsj 0/1 ContainerCreating 0 1s

/tmp/tsflow-202104120928

RNIB populated. The Traffic Steering xApp's log should inicate that it is reading RAN data. When ready for the next step,

BR

Daniel

anjan goswami

Hi All

In the Bronze release, while trying to run the A1 flow demo use case between RIC and nonRTRIC, I am encountering an error in the segment below. The code snippet is from a1-ric.sh:

# populate DB

echo && echo "===> Inject DBaaS (RNIB) with testing data for Traffic Steering test"

if [ ! -e ts ]; then

git clone http://gerrit.o-ran-sc.org/r/ric-app/ts [Note i have also tried explicitly mentioning bronze branch too]

fi

pushd "$(pwd)"

cd ts/test/populatedb

./populate_db.sh

popd

acknowledge "RNIB populated. The Traffic Steering xApp's log should inicate that it is reading RAN data. When ready for the next step, "

===> This is giving below error while running

===> Inject DBaaS (RNIB) with testing data for Traffic Steering test

Cloning into 'ts'...

warning: redirecting to https://gerrit.o-ran-sc.org/r/ric-app/ts/

remote: Counting objects: 6, done

remote: Total 297 (delta 0), reused 297 (delta 0)

Receiving objects: 100% (297/297), 131.94 KiB | 2.03 MiB/s, done.

Resolving deltas: 100% (141/141), done.

/tmp/tsflow-202104221803 /tmp/tsflow-202104221803

release "dbprepop" deleted

Sending build context to Docker daemon 19.97kB

Step 1/17 : FROM nexus3.o-ran-sc.org:10004/o-ran-sc/bldr-ubuntu18-c-go:8-u18.04 as buildenv

manifest for nexus3.o-ran-sc.org:10004/o-ran-sc/bldr-ubuntu18-c-go:8-u18.04 not found: manifest unknown: manifest unknown

NAME: dbprepop

LAST DEPLOYED: Thu Apr 22 18:07:24 2021

NAMESPACE: ricplt

STATUS: DEPLOYED

There seems to be some issue with the tag, since a manual docker pull gives the same error. I tried various tags as mentioned in the URLs below, but that doesn't seem to resolve the issue.

ORAN Base Docker Images for CI Builds - O-RAN SC - Confluence (o-ran-sc.org)

https://blog.csdn.net/jeffyko/article/details/107872740

The only manifest that seems to work is the one below, though it later fails to populate the DB.

root@nearrtric:/tmp/anj_non_crash/ts/tn# docker pull nexus3.o-ran-sc.org:10002/o-ran-sc/bldr-ubuntu18-c-go:1.9.0

root@nearrtric:/tmp/anj_non_crash/ts# docker build -t ric-app-ts:1.0.11 .

Sending build context to Docker daemon 355.3kB

Step 1/39 : FROM nexus3.o-ran-sc.org:10002/o-ran-sc/bldr-ubuntu18-c-go:1.9.0 as buildenv

1.9.0: Pulling from o-ran-sc/bldr-ubuntu18-c-go

root@nearrtric:/tmp/anj_non_crash/ts/test/populatedb# sudo kubectl get jobs -A

NAMESPACE NAME COMPLETIONS DURATION AGE

ricinfra tiller-secret-generator 1/1 5s 2d

ricplt dbprepopjob 0/1 44s 44s

root@nearrtric:/tmp/anj_non_crash/ts/test/populatedb# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

ricplt dbprepopjob-q8xpz 0/1 ErrImagePull 0 55s

root@nearrtric:/tmp/anj_non_crash/ts/test/populatedb# docker images | grep ubuntu18

nexus3.o-ran-sc.org:10002/o-ran-sc/bldr-ubuntu18-c-go 1.9.0 cdcad96bd128 4 months ago 2.17GB

root@nearrtric:/tmp/anj_non_crash/ts# docker images | grep ric-app-ts

ric-app-ts 1.0.11 8e16e5ea65f9 28 seconds ago 110MB

nexus3.o-ran-sc.org:10002/o-ran-sc/ric-app-ts 1.0.13 a6befe55b1e8 4 months ago 109MB

Any help would be appreciated.

anjan goswami

I resolved the issue in the following way:

git clone http://gerrit.o-ran-sc.org/r/ric-app/ts.git -b bronze

There are two Dockerfiles (Dockerfile and test/populatedb/Dockerfile). In both, use the tag below instead:

FROM nexus3.o-ran-sc.org:10002/o-ran-sc/bldr-ubuntu18-c-go:1.9.0 as buildenv

Also, in "test/populatedb/Dockerfile", add the line marked with + below to avoid the Python package fetch problem:

ENV LD_LIBRARY_PATH=/usr/local/lib

-

+RUN apt-get update && apt-get install -y apt-transport-https

RUN apt-get install -y cpputest

Without that line, the build fails as follows:

Get:123 http://archive.ubuntu.com/ubuntu bionic/universe amd64 libboost-wave-dev amd64 1.65.1.0ubuntu1 [2952 B]

Get:124 http://archive.ubuntu.com/ubuntu bionic/universe amd64 libboost-all-dev amd64 1.65.1.0ubuntu1 [2340 B]

E: Failed to fetch http://security.ubuntu.com/ubuntu/pool/main/p/python3.6/libpython3.6_3.6.9-1~18.04ubuntu1.3_amd64.deb 404 Not Found [IP: 91.189.88.142 80]

Fetched 19.7 MB in 5s (3809 kB/s)

E: Failed to fetch http://security.ubuntu.com/ubuntu/pool/main/p/python3.6/libpython3.6-dev_3.6.9-1~18.04ubuntu1.3_amd64.deb 404 Not Found [IP: 91.189.88.142 80]

E: Failed to fetch http://security.ubuntu.com/ubuntu/pool/main/p/python3.6/python3.6-dev_3.6.9-1~18.04ubuntu1.3_amd64.deb 404 Not Found [IP: 91.189.88.142 80]

E: Unable to fetch some archives, maybe run apt-get update or try with --fix-missing?

The command '/bin/sh -c apt-get install -y libboost-all-dev' returned a non-zero code: 100

Hope it helps someone.
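
For anyone who wants to script the Dockerfile change described above, here is a minimal sketch. It assumes GNU sed and that each Dockerfile has a build stage line of the form "FROM ... as buildenv", as in the build output earlier in this thread. patch_base_image is a hypothetical helper, not something shipped in the ts repository.

```shell
#!/bin/sh
# Sketch: point a Dockerfile's build stage at the base image tag reported to work above.
# patch_base_image is a hypothetical helper, not part of the ts repository.
BASE_IMAGE="nexus3.o-ran-sc.org:10002/o-ran-sc/bldr-ubuntu18-c-go:1.9.0"

patch_base_image() {
    dockerfile="$1"
    # Rewrite only the build stage line: FROM <anything> as buildenv
    sed -i "s|^FROM .* as buildenv\$|FROM ${BASE_IMAGE} as buildenv|" "$dockerfile"
}

# Usage (inside a checkout of the bronze branch):
#   git clone http://gerrit.o-ran-sc.org/r/ric-app/ts.git -b bronze
#   cd ts
#   patch_base_image Dockerfile
#   patch_base_image test/populatedb/Dockerfile
```

The "apt-get update" line for test/populatedb/Dockerfile still has to be added by hand, since its exact position depends on the file contents.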

Junior Salem

Hello experts,

I got the following error:

ricxapp-trafficxapp-58d4946955-86cxr 0/1 ErrImagePull 0 41s

Has anyone had the same issue?

Br,

Junior

azsx9015223@gmail.com

Hi all, I am using the Near-RT RIC in the Cherry release,

and I found this error in the "e2-mgr" log:

{"crit":"ERROR","ts":1626432651814,"id":"E2Manager","msg":"#RmrSender.Send - RAN name: , Message type: 1101 - Failed sending message. Error: #rmrCgoApi.SendMsg - Failed to send message. state: 2 - send/call could not find an endpoint based on msg type","mdc":{"time":"2021-07-16 10:50:51.814"}}

Can anyone help me understand what this error means?

Thanks a lot!

RAGHU KOTA

Using the code from the master repo, I was trying to test the alarm interface as explained in the video at "https://wiki.o-ran-sc.org/download/attachments/20875214/o1.mp4?version=1&modificationDate=1593573258294&api=v2". I see that additional parameters have now been introduced, and it is not working as expected.

Is there any way I can test end-to-end alarm generation?

Anonymous

Hi, all

I have installed the Near-RT RIC and tried to run odu-high.sh.

After setting the RIC host and the ODU host and compiling the code, I got stuck at the step shown below. Can anyone help me solve it?

(Do these two errors result from the code trying to connect to the O-CU and another component, as mentioned in the odu-high demo video?)

Received Scheduler gen config at MAC

INFO --> SCH : Received scheduler gen config

DEBUG --> SCH : Entered SchInstCfg()

INFO --> SCH : Scheduler gen config done

DEBUG --> Sending Scheduler config confirm to DU APP

DEBUG --> DU_APP : Received SCH CFG CFM at DU APP

INFO --> DU_APP : Configuring all Layer is complete

CmInetSctpConnectx() Failed : error(115), port(0x9648), sockFd->fd(4)

INFO --> SCTP : Polling started at DU

CmInetSctpConnectx() Failed : error(115), port(0x7dde), sockFd->fd(5)

DEBUG --> DU_APP : Sending EGTP config request

DEBUG --> EGTP : EGTP configuration successful

DEBUG --> SCTP : Assoc change notification received

INFO --> Event : CANT START ASSOC

DEBUG --> SCTP : Forwarding received message to duApp

DEBUG --> SCTP : AssocId assigned to F1Params from PollParams [25]
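
A note on reading the two CmInetSctpConnectx() lines in the log above: the ports are printed in hexadecimal, and converting them to decimal makes it easier to see which peer each connection attempt targets. The sketch below uses a hypothetical helper, hex_to_dec. On Linux, errno 115 is EINPROGRESS, which a non-blocking connect returns while the connection is still being set up. 38472 is the SCTP port registered for F1AP, and 32222 looks like the NodePort that Near-RT RIC deployments typically expose for E2 termination; treat that second mapping as an assumption and check it against your own cluster.

```shell
#!/bin/sh
# Sketch: convert the hexadecimal port printed by CmInetSctpConnectx() to decimal.
# hex_to_dec is a hypothetical helper name.
hex_to_dec() {
    # POSIX shell arithmetic accepts 0x-prefixed constants.
    echo "$(( $1 ))"
}

hex_to_dec 0x9648   # prints 38472
hex_to_dec 0x7dde   # prints 32222
```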

Preethika Prathaban

Hi All,

I have installed the Near-RT RIC Cherry release and am trying to experiment with the E2 interface, following the video linked on this page.

On running odu-high I can see SCTP communication come up and a response message. However, the following error is observed in e2mgr:

ERROR:

"{"crit":"ERROR","ts":1629713862158,"id":"E2Manager","msg":"#RmrSender.Send - RAN name: , Message type: 1101 - Failed sending message. Error: #rmrCgoApi.SendMsg - Failed to send message. state: 2 - send/call could not find an endpoint based on msg type","mdc":{"time":"2021-08-23 10:17:42.158"}}"

On checking the list of nodeBs available in e2mgr, the status is shown as "disconnected".

I also need reference links that explain communication between xApps and the E2 node, with use cases.

Help is much appreciated!

mhz

Hello,

How is the O-RAN software meant to be used once it is fully installed?

Is there any documentation on how to work with the O-RAN functionalities?

Rajesh Kumar

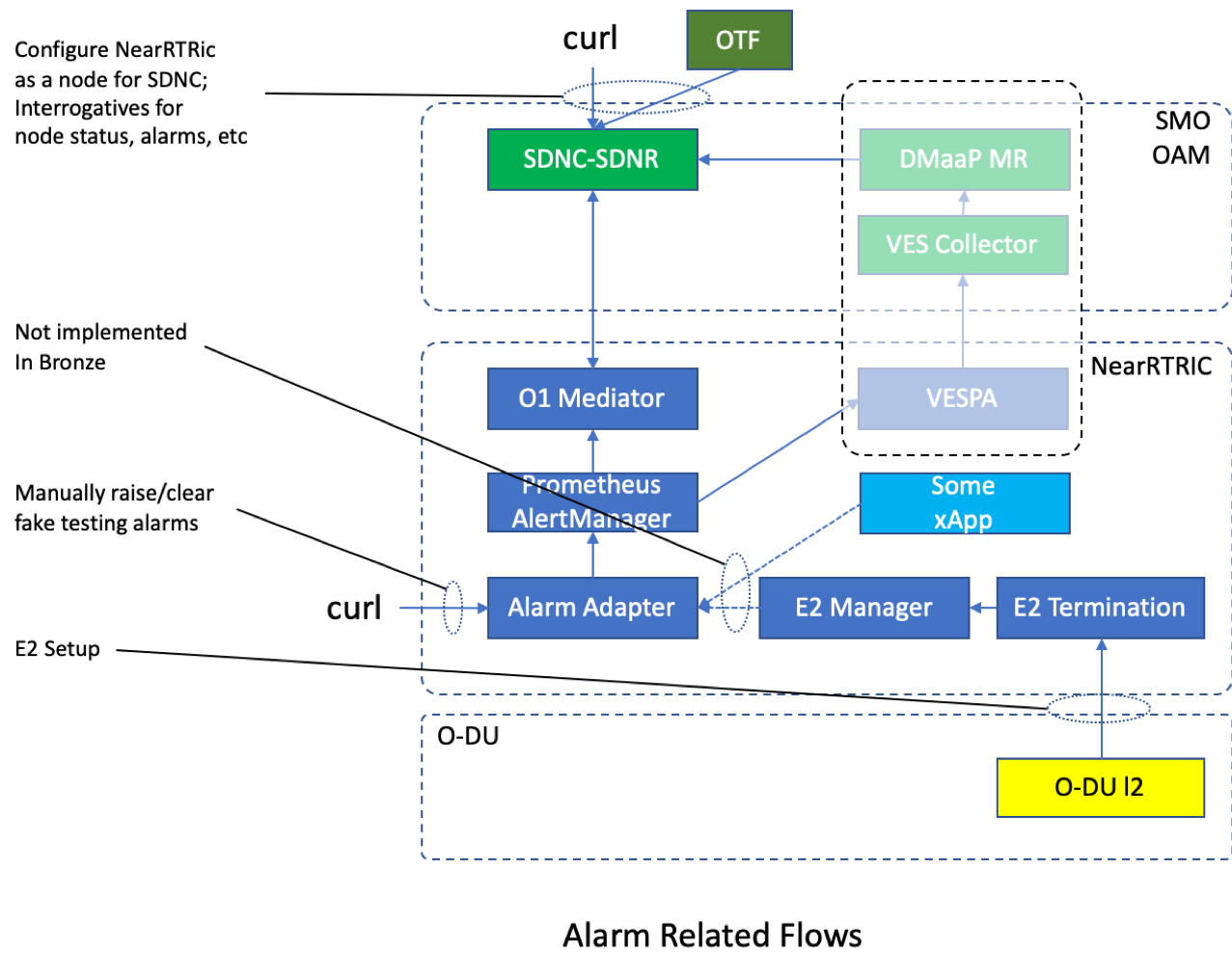

Hi All,

Has anyone explored the alarm management use case using the O1 mediator and SDNC-SDNR? I installed the Near-RT RIC platform and am able to receive alarms in DMaaP through the VES agent, but I want to explore the O1 mediator flow further. Please let me know how it works and how to test this use case. Thanks

Mustafa Luqa

The command on the page seems to be incorrect. Anyone who gets an error reply to the curl command can try:

curl -v -X PUT --header "Content-Type: application/json" "http://$(hostname):30093/policy?id=FROMOTF&ric=ric1&service=dummyService&type=${POLICY_TYPE_ID}" --data "{\"threshold\" : 1}"
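
Compared with the command on the page, this version fixes the "-v i-X" typo, uses port 30093 instead of 30091, and passes the policy type as "type=" rather than a second "id=". To keep the query parameters from getting mixed up again, the URL can be assembled from named parts. This is only a sketch: policy_url is a hypothetical helper, and the values are the ones used in this thread.

```shell
#!/bin/sh
# Sketch: assemble the policy request URL from named parts.
# policy_url is a hypothetical helper; the values mirror the command above.
policy_url() {
    host="$1"; port="$2"; instance_id="$3"; ric="$4"; service="$5"; type_id="$6"
    printf 'http://%s:%s/policy?id=%s&ric=%s&service=%s&type=%s\n' \
        "$host" "$port" "$instance_id" "$ric" "$service" "$type_id"
}

# Usage on the SMO cluster:
#   POLICY_TYPE_ID="20008"
#   curl -v -X PUT --header "Content-Type: application/json" \
#        "$(policy_url "$(hostname)" 30093 FROMOTF ric1 dummyService "$POLICY_TYPE_ID")" \
#        --data '{"threshold" : 1}'
```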

Rajesh Kumar

Hi Team, can someone let me know how to configure the Near-RT RIC in SDNC?

Chandra Shekar Telagamaneni

Hi Team,

Could you please tell me which APIs we can use with the curl command for the O1 mediator?

or

Is there any file in the O1 mediator repo that we can use as a reference for making API calls?

Thanks a lot