Purpose

Provide an environment for end-to-end testing and demonstration of MVP-C features from O-RAN.

Status

O-DU Server 192.168.50.191 is not networked correctly. It has limited availability/use.

Access

Lab Access Request Instructions

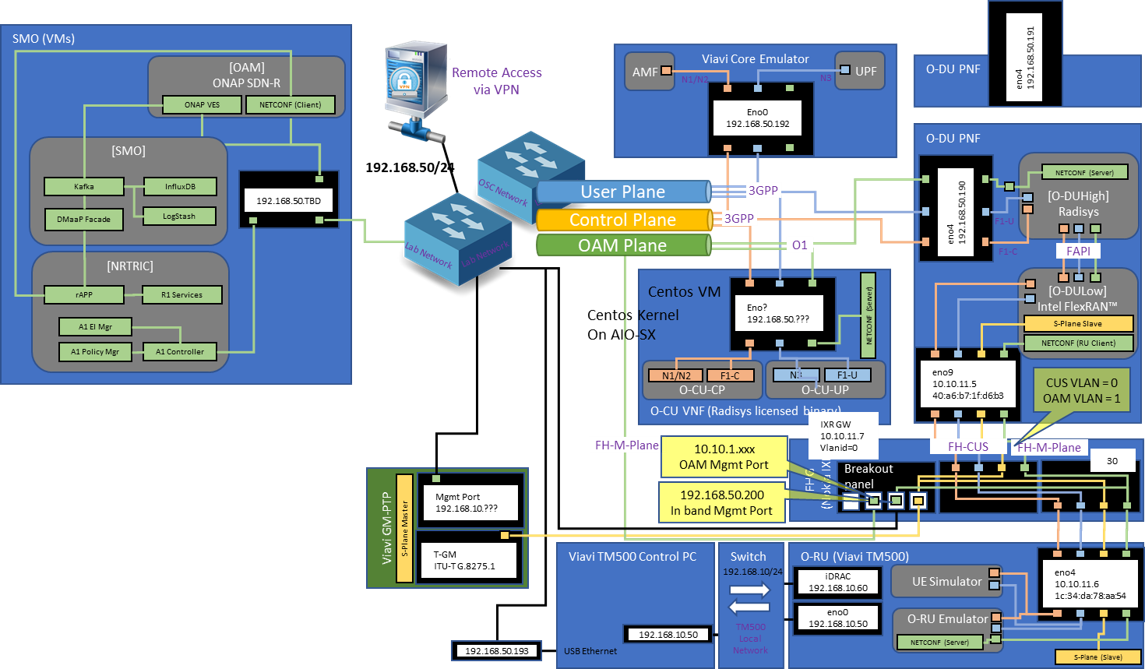

Diagram

Editable Lab Diagram (PowerPoint)

NJ Lab Elements

Lab Networks

Elements in the lab use various networks for both developer access and operational end-to-end integrations. The following table describes the lab networks.

| Network | Subnet | VLAN ID | Port Range | Purpose |
|---|---|---|---|---|
| LabNet | 192.168.50.0/24 | | Odd | General-purpose server access network used by project developers to develop, install, and test new contributions. This network is also used for the O2 interface. |
| iDRAC | 192.168.4.0/24 | | LabNet+1 | Out-of-band management network used to remotely access servers that are no longer accessible due to configuration or power. |
| OAM Plane | 10.0.1.0/24 | 21 | 1-16 | Network used for communications between the SMO and the network function for configuration and data collection (O1, A1, FH-MPlane). |
| Control Plane | 10.0.2.0/24 | 22 | 17-32 | Network control interface for communications between network control functions. |
| User Plane | 10.0.3.0/24 | 23 | 33-48 | Network for passing user plane data through the network. |
| Custom | Custom | Custom | Custom | These networks serve specialty functions such as the CPRI or eCPRI interface between the O-DU and the O-RU. |

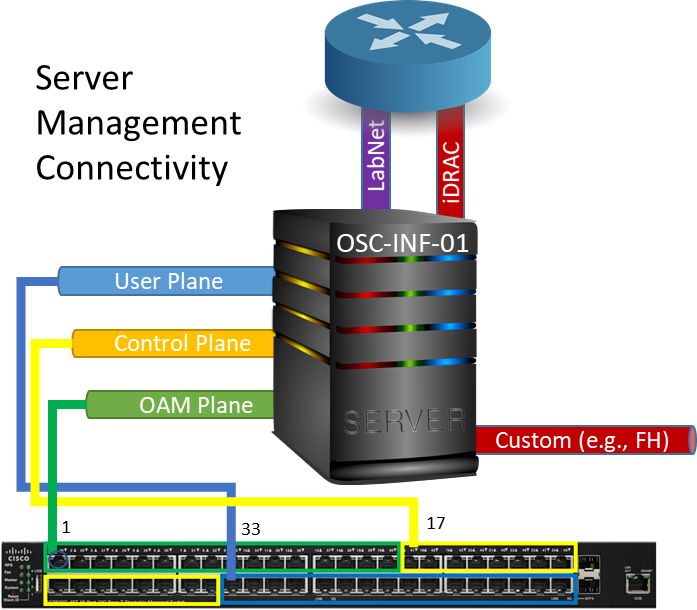
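As a quick illustration of how the table can be used, the following minimal sketch maps an IP address to the lab network it belongs to. The subnets are transcribed from the table above in full dotted notation; the names `LAB_NETWORKS` and `network_for` are hypothetical and exist only for this example. The Custom networks are omitted because they have no fixed subnet.

```python
import ipaddress
from typing import Optional

# Subnets transcribed from the Lab Networks table (illustrative only).
LAB_NETWORKS = {
    "LabNet": ipaddress.ip_network("192.168.50.0/24"),
    "iDRAC": ipaddress.ip_network("192.168.4.0/24"),
    "OAM Plane": ipaddress.ip_network("10.0.1.0/24"),
    "Control Plane": ipaddress.ip_network("10.0.2.0/24"),
    "User Plane": ipaddress.ip_network("10.0.3.0/24"),
}


def network_for(address: str) -> Optional[str]:
    """Return the name of the lab network containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for name, subnet in LAB_NETWORKS.items():
        if ip in subnet:
            return name
    return None


if __name__ == "__main__":
    # The O-DU server address listed under Status falls in the LabNet subnet.
    print(network_for("192.168.50.191"))
```

An address outside every listed subnet returns `None`, which makes the helper usable as a simple sanity check when recording new server assignments.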

Physical Servers

Physical infrastructure within the labs is allocated to an OSC project and is administered by the PTL or delegated to an individual on the team. Having access to the lab does not equate to access to a server. Individuals are expected to have unique logins to servers so that activity on a server can be traced when errant behavior is detected. To gain access to a server, contact the PTL for that server.

Since lab resources are often reassigned or reused for new functions, they are built according to a template. This allows repurposing without always requiring a lab change request. The following figure shows the two general patterns for server deployments.

The server is configured with all networks, even if they are not currently needed. Server hosts are named sequentially when provisioned. Likewise, the ports for the three data networks follow their corresponding port assignments. IP assignment is also correlated: 10 is added to the number in the server host name to give the last octet of the IP address. In the illustrative example, the OSC-INF-01 server has an OAM IP of 10.0.1.11, a Control IP of 10.0.2.11, and a User IP of 10.0.3.11.
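The addressing rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: host names are assumed to end in their sequence number (as in OSC-INF-01), the network prefixes are taken from the Lab Networks table, and the names `data_plane_ips`, `DATA_NETWORK_PREFIXES`, and `HOST_OFFSET` are hypothetical.

```python
import re
from typing import Dict

# First three octets of each data network, from the Lab Networks table.
DATA_NETWORK_PREFIXES = {
    "oam": "10.0.1",
    "control": "10.0.2",
    "user": "10.0.3",
}

# Value added to the host sequence number to obtain the last octet.
HOST_OFFSET = 10


def data_plane_ips(hostname: str) -> Dict[str, str]:
    """Derive the OAM, Control, and User IPs from a sequential host name.

    Assumes the host name ends in its sequence number, e.g. "OSC-INF-01".
    """
    match = re.search(r"(\d+)$", hostname)
    if match is None:
        raise ValueError(f"no trailing sequence number in {hostname!r}")
    last_octet = int(match.group(1)) + HOST_OFFSET
    if not 1 <= last_octet <= 254:
        raise ValueError(f"derived host octet {last_octet} is out of range")
    return {
        plane: f"{prefix}.{last_octet}"
        for plane, prefix in DATA_NETWORK_PREFIXES.items()
    }


if __name__ == "__main__":
    for plane, ip in data_plane_ips("OSC-INF-01").items():
        print(plane, ip)
```

For OSC-INF-01 the sequence number is 1, so the last octet is 11 on all three networks, matching the example in the text.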

Virtual Machines

The New Jersey lab also uses OpenStack virtual machines for the SMO, OAM, and Non-RT RIC projects. These machines are connected to both the LabNet and OAM networks. Since they are SMO components, they do not need access to the Control and User networks.

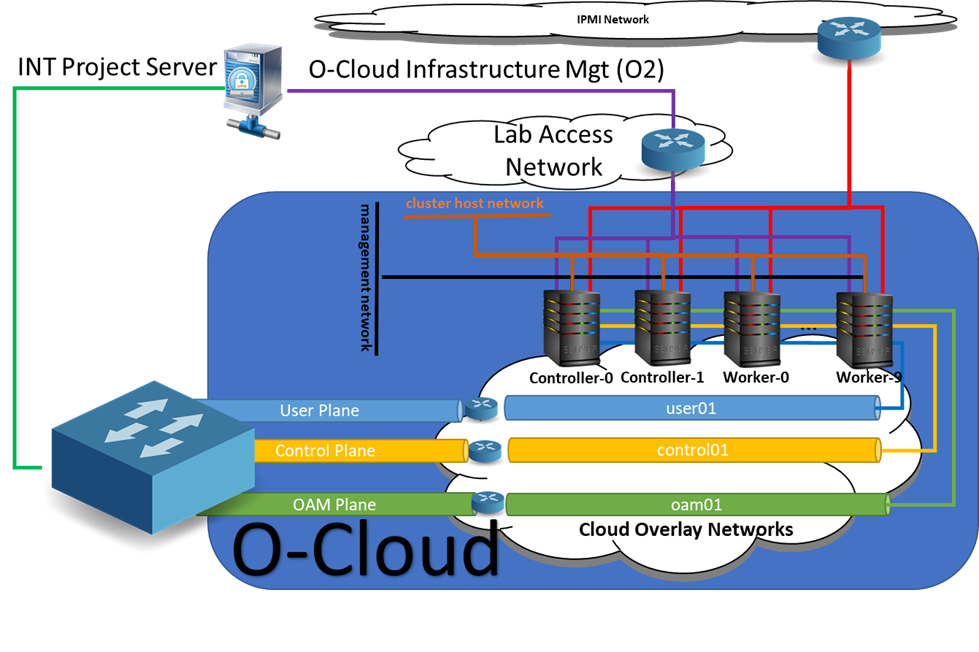

O-Clouds

The Infrastructure (INF) project builds the O-Clouds. Workloads can be deployed onto them through the O2 interface. An independent Cloud Control Plane network does not exist. Instead, the LabNet is used to connect to the O2ims and O2dms endpoints.

The O-Cloud is intended, when complete, to support three different cluster patterns. The Simplex, Duplex, and Duplex+ patterns are defined in more detail on the project wiki: https://wiki.o-ran-sc.org/display/IN/INF+Project+Hardware+Requirements. The generalized pattern is shown in the figure below.

The figure also depicts the Integration (INT) project server. This server hosts the INT Open Test Framework (OTF), which all projects can use to create automated tests for unit/feature testing, pairwise testing, and end-to-end tests/demonstrations. It can also execute suites of tests such as Release and Certification tests.

Software Deployment Allocations

Service Management & Orchestration (SMO)

As previously noted, the SMO, OAM, and Non-RT RIC, including rApps, run on VMs. We are currently exploring the idea of deploying these on the O-Cloud Duplex Cluster.

O-DU (High and Low)

The O-DU is a physical server with specialty hardware from Intel to run the FlexRAN software as the O-DU Low. The O-DU High is deployed on the server manually and launched. The O-DU has a custom interface through a Fronthaul Gateway to an O-RU simulator.

O-CU

The O-CU is being converted from a monolithic application on a CentOS VM to a bare-metal, container-based implementation. It will be deployed on the O-Cloud Simplex Cluster.

Near-RT RIC

The Near-RT RIC is currently in an isolated portion of the lab. Simulation (SIM) software is used because element functionality to support the E2 service model is not yet available. We are looking to migrate this to the O-Cloud Duplex+ Cluster in a future release.

Software Simulations (SIM)

The SIM software is currently deployed on VMs but will migrate to the O-Cloud so that either a simulated element or one under development can be placed into the integrated service path.

Hardware Simulations

Currently, two hardware platforms are used as simulators. Both are from Viavi. The first is a 5G Core simulator, which simulates the AMF and UPF elements. The second is an O-RU simulator, which can send messages over the Fronthaul interface to the O-DU, simulating UEs in a cell provided by an O-RU.

Specialty Hardware

There are two specialty devices in the lab.

- Grandmaster clock. In New Jersey we are using a Viavi device that allows us to transmit the high-precision clock signal to the Fronthaul Gateway in a lab less than 100 meters away.

- Nokia IXR as a Fronthaul Gateway. The SMO needs to support O-RU monitoring, and therefore the lab is configured to support the Hybrid OAM Architecture model described by O-RAN. In this model the Fronthaul Gateway provides a network gateway representing the SMO within the FH connection. This allows routing for the NETCONF session between the SMO and the O-RU through the IXR in-band management port. The IXR also provides the native grandmaster clock signal as a pass-through to both the O-DU and the O-RU providing consistent fine grain c