Introduction

Kafka metrics can be collected and displayed using different methods.

Prometheus

Prometheus can be used to collect monitoring data from Kafka.

...

    kafka:
      authorization:
        type: simple
        superUsers:
          - CN=kowl
          - CN=connect
      ...
      metricsConfig:
        type: jmxPrometheusExporter
        valueFrom:
          configMapKeyRef:
            name: kafka-metrics
            key: kafka-metrics-config.yml
    ...
    zookeeper:
      replicas: 1
      storage:
        deleteClaim: false
        size: 10Mi
        type: persistent-claim
      metricsConfig:
        type: jmxPrometheusExporter
        valueFrom:
          configMapKeyRef:
            name: kafka-metrics
            key: zookeeper-metrics-config.yml
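Both `metricsConfig` entries above point at a ConfigMap named `kafka-metrics`, which has to exist in the same namespace as the Kafka resource. A minimal sketch of such a ConfigMap follows. The catch-all `.*` pattern is a placeholder assumption that exports every MBean attribute the exporter can read; it is not the rule set used on this page, and real deployments usually start from the more selective rules shipped with the Strimzi examples.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-metrics          # must match configMapKeyRef.name
data:
  # Key referenced by kafka.metricsConfig
  kafka-metrics-config.yml: |
    lowercaseOutputName: true
    rules:
      - pattern: ".*"          # placeholder: export everything (assumption)
  # Key referenced by zookeeper.metricsConfig
  zookeeper-metrics-config.yml: |
    lowercaseOutputName: true
    rules:
      - pattern: ".*"          # placeholder: export everything (assumption)
```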

...

    scrape_configs:
      - job_name: prometheus
        static_configs:
          - targets:
              - localhost:9090
      - job_name: kafka-exporter
        scrape_interval: 10s
        metrics_path: /metrics
        static_configs:
          - targets:
              - kafka-exporter.kafka:9100

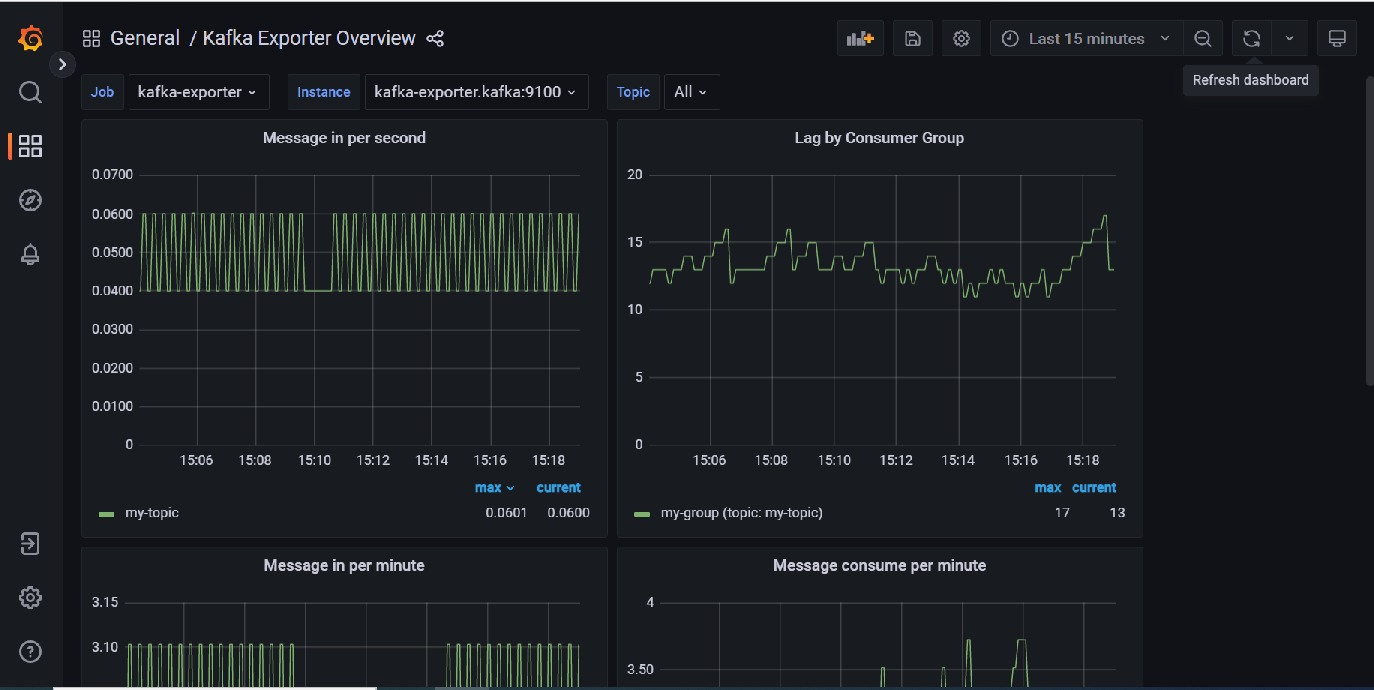

Grafana

You can then set up the Grafana dashboard to view your metrics: kafka-exporter-overview_rev5.json
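The dashboard needs a Prometheus data source in Grafana before it can show anything. One way to add it is a data source provisioning file, sketched below. The data source name and the URL are assumptions (the URL simply reuses the `localhost:9090` Prometheus target from the scrape configuration); adjust both to your environment.

```yaml
# e.g. provisioning/datasources/prometheus.yaml in the Grafana config directory
apiVersion: 1
datasources:
  - name: Prometheus              # hypothetical name, select it when importing the dashboard
    type: prometheus
    access: proxy
    url: http://localhost:9090    # assumed Prometheus address
    isDefault: true
```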

These metrics can also be imported into Telegraf by adding the following lines to your inputs:

    [[inputs.prometheus]]
      urls = ["http://kafka-exporter.kafka:9100/metrics"]
      response_timeout = "10s"

You can also include metrics from your Kafka bridge by adding the following line to the bridge spec: enableMetrics: true
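As a rough illustration, the setting sits at the top level of the bridge spec. The resource name, bootstrap address and HTTP port below are hypothetical placeholders, and the sketch assumes a Strimzi version whose `KafkaBridge` resource still accepts the `enableMetrics` field.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaBridge
metadata:
  name: my-bridge                                     # hypothetical name
spec:
  replicas: 1
  bootstrapServers: my-cluster-kafka-bootstrap:9092   # hypothetical address
  http:
    port: 8080                                        # hypothetical port
  enableMetrics: true                                 # the line described above
```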

Note: It is also possible to obtain JMX metrics using Jolokia (see the paksu/kafka-jolokia-telegraf-collector project on GitHub: "Simple Kafka broker JMX metric collection with Telegraf"), but this requires creating a custom Kafka cluster image if you are using Strimzi.

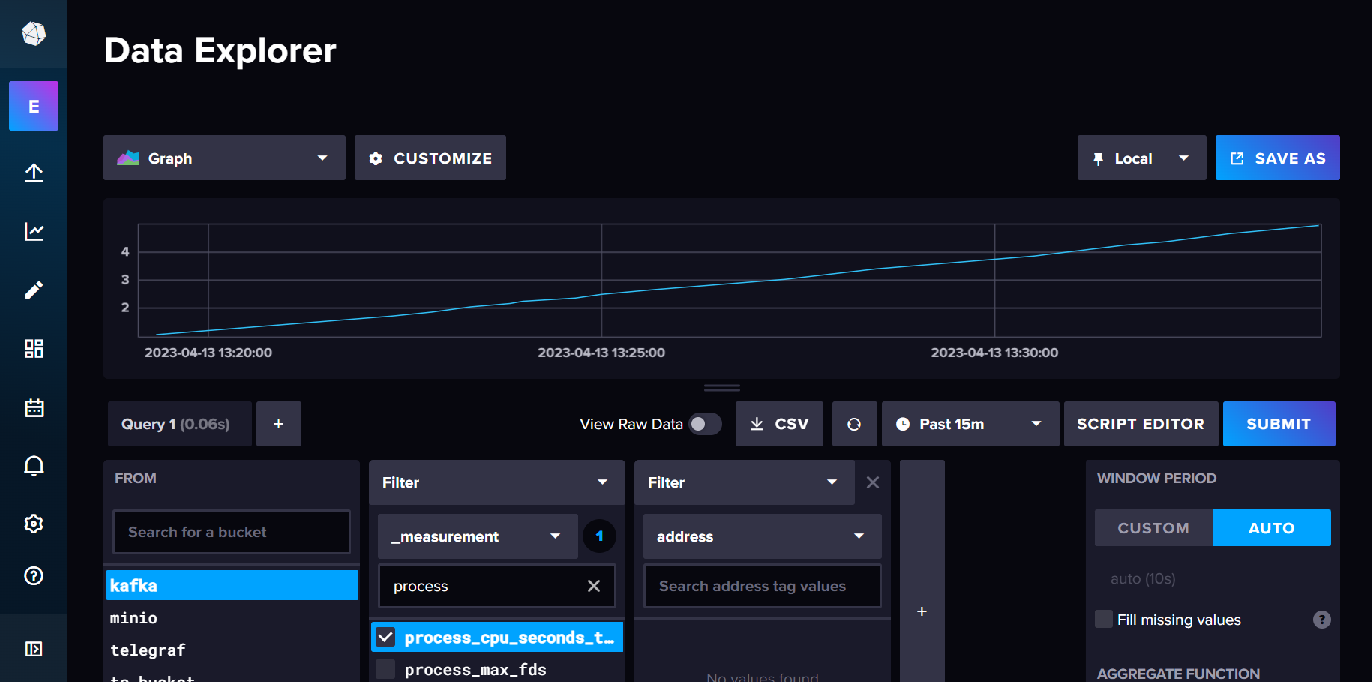

InfluxDB

To store these metrics in InfluxDB, all you need to do is set up a bucket and a metrics scraper for the kafka-exporter metrics endpoint.

You can then view the metrics in the Data Explorer.

Links

Grafana Dashboard for Kafka Exporter