NONRTRIC-634

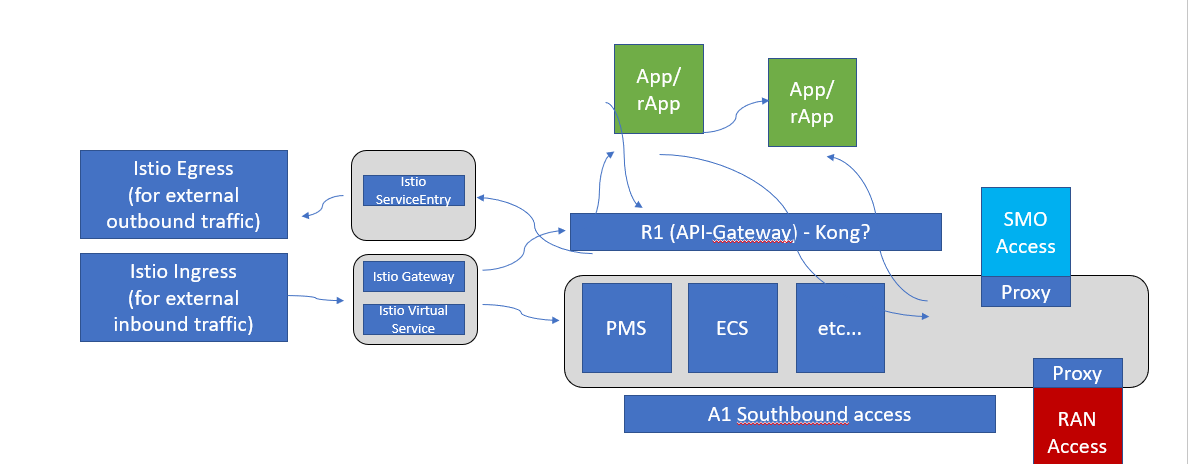

ISTIO

Istio is a service mesh which provides a dedicated infrastructure layer that you can add to your applications. It adds capabilities like observability, traffic management, and security, without adding them to your own code.

To populate its own service registry, Istio connects to a service discovery system. For example, if you’ve installed Istio on a Kubernetes cluster, then Istio automatically detects the services and endpoints in that cluster. Using this service registry, the Envoy proxies can then direct traffic to the relevant services.

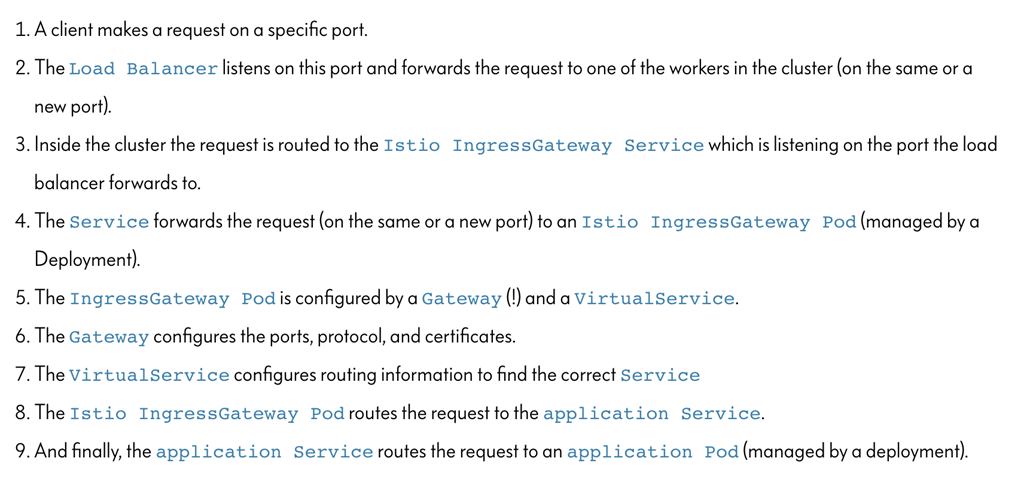

The Istio Ingress Gateway can be used as an API gateway to securely expose the APIs of your microservices. It can be configured to provide access control for the APIs, i.e. to apply policies that define who can access the APIs, which operations they are allowed to perform, and other conditions.

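The Istio traffic management API resources used here are:

- Virtual services: configure request routing to services within the service mesh. Each virtual service can contain a series of routing rules that are evaluated in order.
- Destination rules: configure the policies (for example load balancing) that apply to traffic for a service after virtual-service routing has occurred.

In the example below, traffic to the ratings service uses least-connection load balancing, except for the testversion subset (pods labelled version: v3), which uses round robin.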

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: bookinfo-ratings
spec:
  host: ratings.prod.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: LEAST_CONN
  subsets:
  - name: testversion
    labels:
      version: v3
    trafficPolicy:
      loadBalancer:
        simple: ROUND_ROBIN
```
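To make the two load-balancing modes in this DestinationRule concrete, here is a minimal Go sketch of how a proxy could pick a backend under ROUND_ROBIN and under LEAST_CONN. The Backend type and its Active counter are hypothetical. Envoy's real least-request balancer samples a small random subset of hosts instead of scanning all of them, and newer Istio releases document LEAST_REQUEST as the replacement for LEAST_CONN.

```go
package main

import "fmt"

// Backend is a hypothetical view of one upstream endpoint and its
// current number of in-flight requests.
type Backend struct {
	Addr   string
	Active int
}

// RoundRobin cycles through the backends in order (ROUND_ROBIN).
type RoundRobin struct{ next int }

// Pick returns the index of the next backend in the rotation.
func (r *RoundRobin) Pick(bs []Backend) int {
	i := r.next % len(bs)
	r.next++
	return i
}

// LeastConn returns the index of the backend with the fewest active requests
// (LEAST_CONN); ties go to the first such backend in this sketch.
func LeastConn(bs []Backend) int {
	best := 0
	for i, b := range bs {
		if b.Active < bs[best].Active {
			best = i
		}
	}
	return best
}

func main() {
	bs := []Backend{{"10.0.0.1", 3}, {"10.0.0.2", 1}, {"10.0.0.3", 2}}
	rr := &RoundRobin{}
	for i := 0; i < 4; i++ {
		fmt.Println("round robin ->", bs[rr.Pick(bs)].Addr)
	}
	fmt.Println("least conn  ->", bs[LeastConn(bs)].Addr)
}
```

Round robin ignores load entirely. Least connection reacts to slow backends but needs per-backend state, which is why proxies approximate it.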

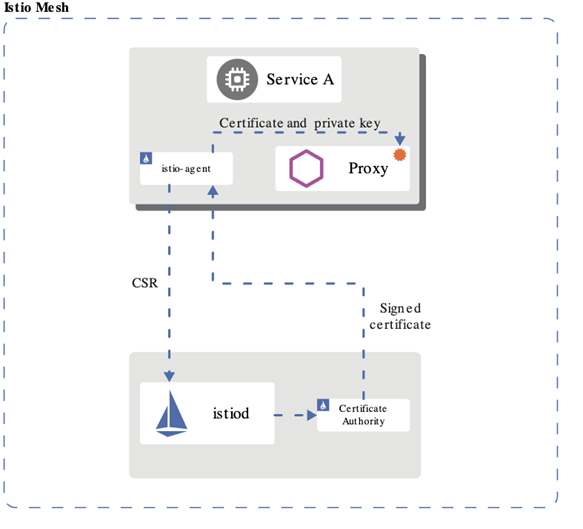

Istio provisions the DNS names and secret names for the DNS certificates based on configuration you provide. The DNS certificates provisioned are signed by the Kubernetes CA and stored in the secrets following your configuration. Istio also manages the lifecycle of the DNS certificates, including their rotations and regenerations.

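With mutual TLS (mTLS), the client and the server both verify each other's certificates and use them to encrypt the traffic between them with TLS. With Istio, mutual TLS can be enforced automatically. For example, the following PeerAuthentication in the root namespace (istio-system) requires mTLS for all workloads in the mesh: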

```yaml
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"
metadata:
  name: "default"
  namespace: "istio-system"
spec:
  mtls:
    mode: STRICT
```

JWT

JSON Web Token (JWT) is an open standard (RFC 7519) that defines a compact and self-contained way for securely transmitting information between parties as a JSON object.

JWT is mostly used for authorization. Once the user has logged in, each subsequent request includes the JWT, allowing the user to access the routes, services and resources that are permitted with that token (user context). With Istio, the original JWT can be forwarded to the upstream service, or the verified payload can be passed on in an HTTP header (see Istio / JWTRule).

A JWT can also contain information about the client that sent the request (client context).

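Istio's RequestAuthentication resource configures JWT validation for your services. In the example below, the httpbin workload in namespace foo validates tokens issued by issuer-foo against the given JWKS, and the AuthorizationPolicy requires every request to carry a valid token (any request principal):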

```yaml
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: httpbin
  namespace: foo
spec:
  selector:
    matchLabels:
      app: httpbin
  jwtRules:
  - issuer: "issuer-foo"
    jwksUri: https://example.com/.well-known/jwks.json
---
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: httpbin
  namespace: foo
spec:
  selector:
    matchLabels:
      app: httpbin
  rules:
  - from:
    - source:
        requestPrincipals: ["*"]
```
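These two resources interact in a way that is easy to misread. RequestAuthentication only rejects requests whose token is invalid. A request with no token passes it without an authenticated principal and is then denied by the AuthorizationPolicy. The hypothetical outcome function below sketches the resulting HTTP status codes for the httpbin workload.

```go
package main

import (
	"fmt"
	"net/http"
)

// tokenState describes the JWT on an incoming request.
type tokenState int

const (
	noToken tokenState = iota
	invalidToken
	validToken
)

// outcome sketches the combined effect of the two resources:
// RequestAuthentication rejects an invalid token with 401; a request with no
// token gets no principal and the AuthorizationPolicy (requestPrincipals ["*"])
// denies it with 403 "RBAC: access denied".
func outcome(t tokenState) int {
	switch t {
	case invalidToken:
		return http.StatusUnauthorized
	case noToken:
		return http.StatusForbidden
	default:
		return http.StatusOK
	}
}

func main() {
	names := map[tokenState]string{noToken: "no token", invalidToken: "invalid token", validToken: "valid token"}
	for _, t := range []tokenState{noToken, invalidToken, validToken} {
		fmt.Printf("%-13s -> %d\n", names[t], outcome(t))
	}
}
```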

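JWT can also be used for service-level authorization. The policy below contains two rules. Requests to httpbin are allowed if they carry a valid JWT (any request principal) or if they target the /healthz path, which therefore needs no token:

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: httpbin
  namespace: foo
spec:
  selector:
    matchLabels:
      app: httpbin
  rules:
  - from:
    - source:
        requestPrincipals: ["*"]
  - to:
    - operation:
        paths: ["/healthz"]
```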
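Rules in an ALLOW policy are alternatives: a request is allowed if any rule matches, while the from and to conditions inside one rule must all match. The sketch below models the httpbin policy with simplified, hypothetical types (exact path matching only).

```go
package main

import "fmt"

// request is a hypothetical, minimal view of what the policy matches on.
type request struct {
	Principal string // "iss/sub" of a validated JWT, or "" if none
	Path      string
}

// rule mirrors one entry under spec.rules; every condition that is set must match.
type rule struct {
	RequireAnyPrincipal bool     // from.source.requestPrincipals: ["*"]
	Paths               []string // to.operation.paths (exact match in this sketch)
}

func (r rule) matches(req request) bool {
	if r.RequireAnyPrincipal && req.Principal == "" {
		return false
	}
	if len(r.Paths) == 0 {
		return true
	}
	for _, p := range r.Paths {
		if p == req.Path {
			return true
		}
	}
	return false
}

// allowed applies an ALLOW policy: any single matching rule admits the request.
func allowed(rules []rule, req request) bool {
	for _, r := range rules {
		if r.matches(req) {
			return true
		}
	}
	return false
}

func main() {
	httpbin := []rule{{RequireAnyPrincipal: true}, {Paths: []string{"/healthz"}}}
	fmt.Println(allowed(httpbin, request{Path: "/headers"}))                              // false: no JWT
	fmt.Println(allowed(httpbin, request{Path: "/healthz"}))                              // true: second rule
	fmt.Println(allowed(httpbin, request{Principal: "ISSUER/SUBJECT", Path: "/headers"})) // true: first rule
}
```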

Use Case

KUBERNETES

K8S RBAC (Role-Based Access Control) is supported by default in Kubernetes.

K8S Service Account

- Kubernetes uses service accounts (SAs) to validate API access
- SAs can be linked to a role via a binding
- A default SA with no permissions (no bindings) is created in each namespace; pods use this SA unless another one is specified
- Pods can be specified to run with a specific SA
- The Helm manager needs an SA with cluster-wide permissions (to be able to list installed charts etc.)

However, during installation the pod running the helm install/upgrade/delete should run with an SA that has only namespace permissions, to ensure that no other modifications are made to Kubernetes objects outside the namespace.

K8S RBAC - controlling access to Kubernetes resources

- Role and RoleBinding objects define who (SA, user or group) is allowed to do what in the Kubernetes API
- Role object
  - Sets permissions on resources in a specific namespace
- ClusterRole object
  - Sets permissions on non-namespaced resources or across namespaces
- RoleBinding object
  - Binds a single Role or ClusterRole to one or more users, groups or service accounts, within one namespace
- ClusterRoleBinding object
  - Binds a single ClusterRole to one or more users, groups or service accounts, cluster-wide
- No installation required: RBAC is enabled by default
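RBAC only grants permissions; there are no deny rules. An access check therefore reduces to asking whether any rule in a bound Role or ClusterRole covers the requested API group, resource and verb. A simplified Go sketch of that check follows. It ignores namespaces, resourceNames and non-resource URLs, and the helmInstaller rules are a hypothetical example of a namespace-scoped role for the Helm install pod.

```go
package main

import "fmt"

// PolicyRule is a simplified version of one entry in a Role's rules list.
type PolicyRule struct {
	APIGroups []string // "" is the core API group
	Resources []string
	Verbs     []string
}

// contains reports whether list holds v or the wildcard "*".
func contains(list []string, v string) bool {
	for _, x := range list {
		if x == "*" || x == v {
			return true
		}
	}
	return false
}

// allows reports whether any rule grants verb on resource in apiGroup.
// Anything not granted by some bound rule is refused.
func allows(rules []PolicyRule, apiGroup, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.APIGroups, apiGroup) && contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	// Hypothetical namespace-scoped rules for the Helm install pod.
	helmInstaller := []PolicyRule{{
		APIGroups: []string{"", "apps"},
		Resources: []string{"deployments", "services", "configmaps", "secrets"},
		Verbs:     []string{"*"},
	}}
	fmt.Println(allows(helmInstaller, "apps", "deployments", "create")) // true
	fmt.Println(allows(helmInstaller, "", "namespaces", "delete"))      // false
}
```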

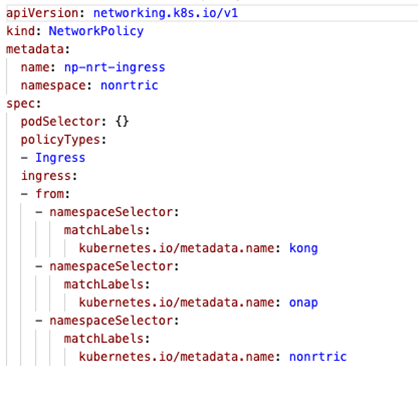

Network Policies

- K8S supports NetworkPolicy objects, but a network plugin that implements them (e.g. Calico) must be installed for the policies to take effect
- Network policies control ingress and/or egress traffic for the pods they select, i.e. they control traffic between pods and/or network endpoints
- Several providers are available: Calico, Cilium, etc.
- Intended policy for the nonrtric namespace:
  - Allow traffic from the namespaces kong (the gateway), nonrtric and onap
  - Deny traffic from any other namespace
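The policy for the nonrtric namespace is an allow-list of source namespaces. The sketch below states that decision in Go. A pod selected by an Ingress policy drops traffic unless some rule matches, and here the rules only match on the source namespace. The example NetworkPolicy (networkpolicy-nr) does this through the kubernetes.io/metadata.name label, which recent Kubernetes versions (1.21 and later) set automatically on every namespace. The map and function names are illustrative.

```go
package main

import "fmt"

// allowedSources mirrors the namespaceSelector entries of networkpolicy-nr.
var allowedSources = map[string]bool{
	"kong":     true, // the API gateway
	"nonrtric": true,
	"onap":     true,
}

// admitIngress decides for a pod selected by the policy (podSelector: {} selects
// every pod in nonrtric): with policyTypes [Ingress], traffic is dropped unless
// some ingress rule matches, and here the rules only match the source namespace.
func admitIngress(sourceNamespace string) bool {
	return allowedSources[sourceNamespace]
}

func main() {
	for _, ns := range []string{"kong", "onap", "nonrtric", "default"} {
		fmt.Printf("from %-9s allowed=%v\n", ns, admitIngress(ns))
	}
}
```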

KONG

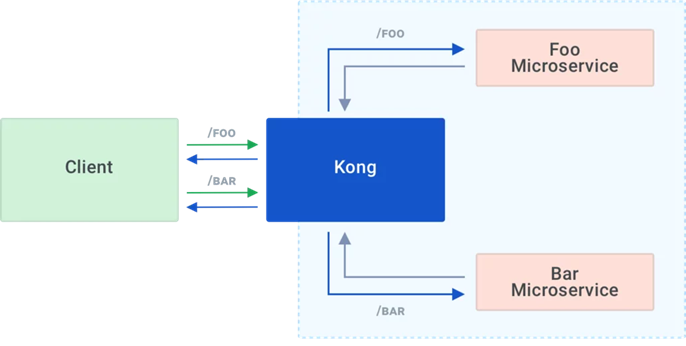

Kong is a microservice API gateway. It provides a flexible abstraction layer that securely manages communication between clients and microservices via APIs. Such a layer is also known as an API gateway, API middleware or, in some cases, a service mesh.

- Kong can be used as an API gateway:
  - Hiding internal microservice structure
  - Could be used as R1 API front-end

Kong acts as the service registry, keeping a record of the available target instances for the upstream services. When a target comes online, it must register itself with Kong by sending a request to the Admin API. Each upstream service has its own ring balancer to distribute requests to the available targets.

With client-side discovery, the client or API gateway making the request is responsible for identifying the location of the service instance and routing the request to it. The client begins by querying the service registry to identify the location of the available instances of the service and then determines which instance to use. See https://konghq.com/learning-center/microservices/service-discovery-in-a-microservices-architecture/
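Service registration and client-side discovery can be sketched with an in-memory registry. Targets register under an upstream name, and the caller looks them up and chooses one. The Registry type below is hypothetical and uses plain round robin. Kong's ring balancer additionally supports weights, health checks and consistent hashing.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Registry is a hypothetical in-memory service registry.
type Registry struct {
	mu      sync.Mutex
	targets map[string][]string // upstream name -> "host:port" targets
	next    map[string]int      // round-robin position per upstream
}

func NewRegistry() *Registry {
	return &Registry{targets: map[string][]string{}, next: map[string]int{}}
}

// Register adds a target when it comes online (with Kong: a call to the Admin API).
func (r *Registry) Register(upstream, target string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.targets[upstream] = append(r.targets[upstream], target)
}

// Pick performs client-side discovery: look up the available targets for the
// upstream and choose one, rotating through them in order.
func (r *Registry) Pick(upstream string) (string, error) {
	r.mu.Lock()
	defer r.mu.Unlock()
	ts := r.targets[upstream]
	if len(ts) == 0 {
		return "", errors.New("no targets registered for " + upstream)
	}
	t := ts[r.next[upstream]%len(ts)]
	r.next[upstream]++
	return t, nil
}

func main() {
	reg := NewRegistry()
	reg.Register("a1-policy", "10.0.0.11:8081")
	reg.Register("a1-policy", "10.0.0.12:8081")
	for i := 0; i < 3; i++ {
		t, _ := reg.Pick("a1-policy")
		fmt.Println("sending request to", t)
	}
}
```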

Kong datastore

Kong uses an external datastore (PostgreSQL or Cassandra) to store its configuration, such as registered APIs, Consumers and Plugins. Plugins can also persist the data they need, for example rate-limiting counters or Consumer credentials. See https://konghq.com/faqs/#:~:text=PostgreSQL%20is%20an%20established%20SQL%20database%20for%20use,Cassandra%20or%20PostgreSQL%2C%20Kong%20maintains%20its%20own%20cache.

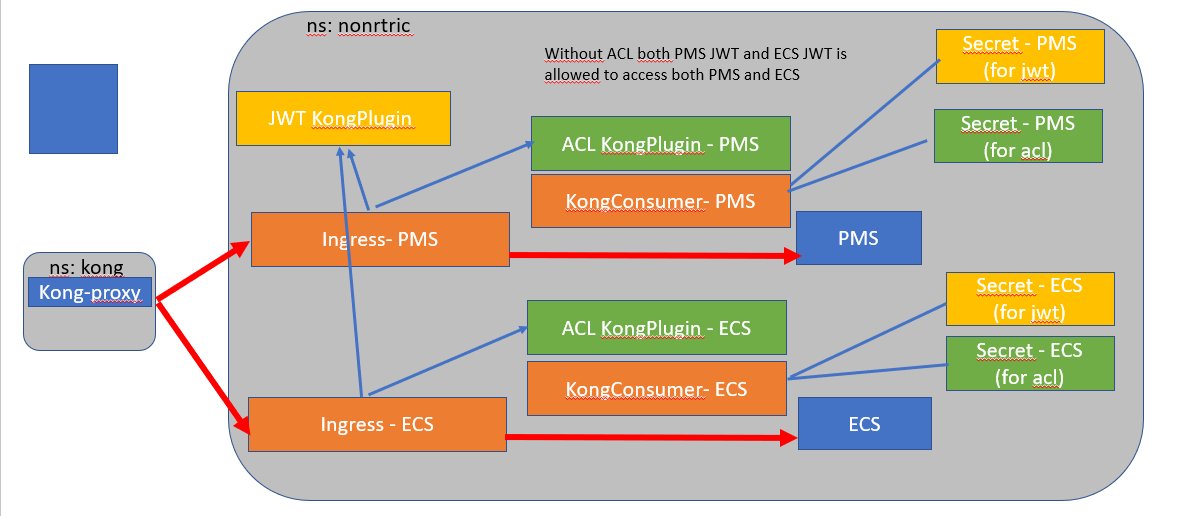

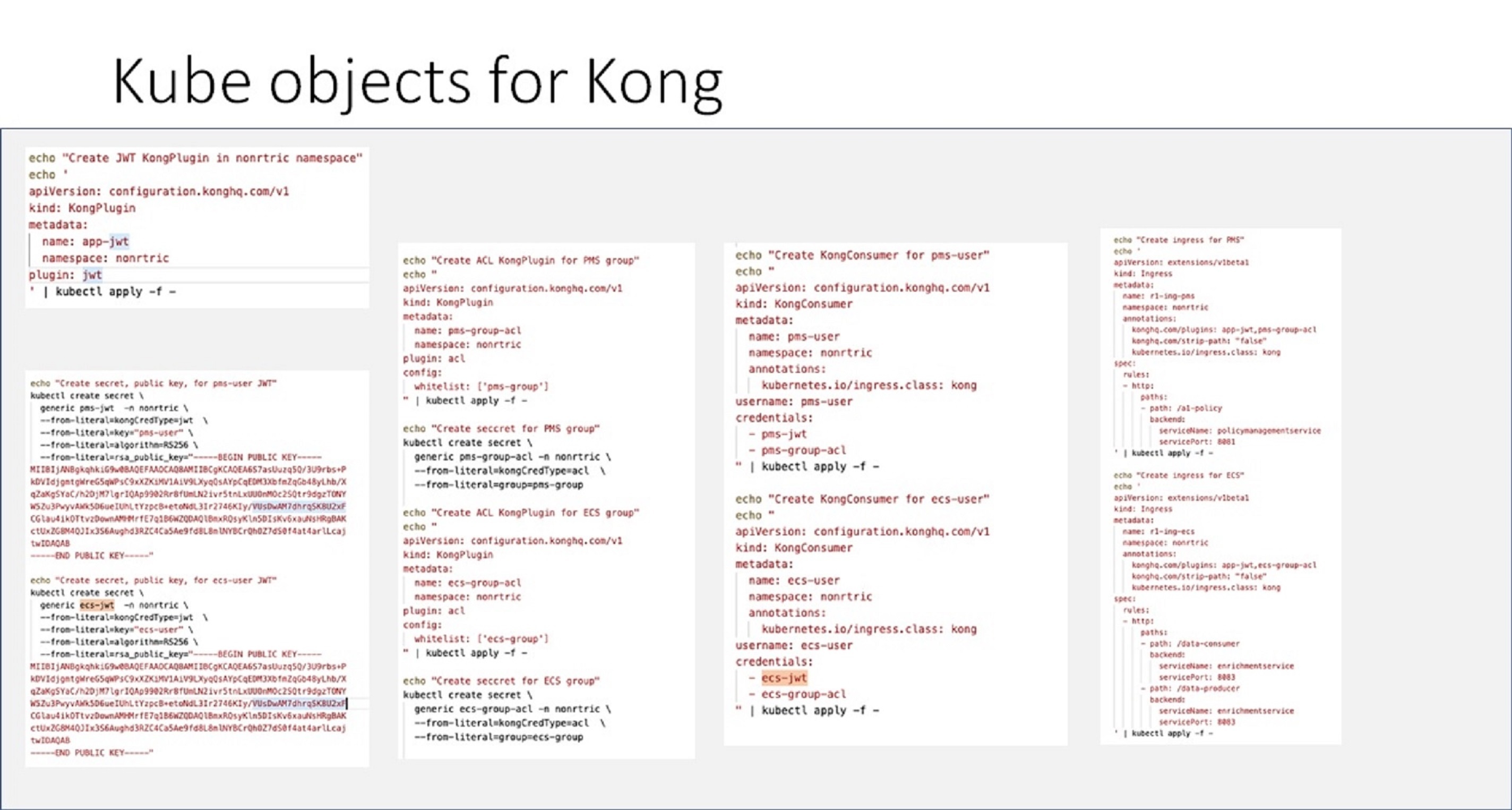

Kong Demo

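A demo of Kong gateway access control is already available.

JWTs are used to grant different users access to particular services. In the configuration below, each KongConsumer (pms-user, ecs-user) has a JWT credential (an issuer key and an RSA public key) and an ACL group credential. Each Ingress enables the jwt plugin together with an ACL plugin that only admits the matching group(s). The NetworkPolicy at the top restricts ingress to the nonrtric namespace to traffic from the kong, onap and nonrtric namespaces.

See also https://konghq.com/blog/jwt-kong-gateway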

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: networkpolicy-nr
  namespace: nonrtric
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kong
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: onap
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: nonrtric
```

```yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: app-jwt-kp
  namespace: nonrtric
plugin: jwt
---
apiVersion: v1
kind: Secret
metadata:
  name: pms-jwt-sec
  namespace: nonrtric
type: Opaque
stringData:
  kongCredType: jwt
  key: pms-issuer
  algorithm: RS256
  rsa_public_key: |
    -----BEGIN PUBLIC KEY-----
    MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAwetu4+suoz6c7e1kQz7I
    Jmujci8zHpp4qh3nsmEL8e3QOKzMVsLuQPcF8lO1bBoChSA+KMNJ5rEixGWSxClp
    9XroBSgrvjDsKtpPIlBQMnyOUYRSXWnIodmN+7wA72pTxo7JtAypPzRscSgi0OZt
    9dtmv50RLr9Wph5cI+IE9OtgW58OKtdFRGigGHfdUEwrT/MPw2rOU85YRFaEgT/i
    wcuQCe+Zmf2S2gVgK62u51ZFFn2VycJT1LcOt9cdqrSXYZAPfVKnQ/EgYvDdzFL1
    x73JkrrSEP3pfrN4bXOnc7cS/S9Y2qk/I+QCR6a6XKmqk5SnWJSyXvKdYQJrgxJp
    lQIDAQAB
    -----END PUBLIC KEY-----
---
apiVersion: v1
kind: Secret
metadata:
  name: ecs-jwt-sec
  namespace: nonrtric
type: Opaque
stringData:
  kongCredType: jwt
  key: ecs-issuer
  algorithm: RS256
  rsa_public_key: |
    -----BEGIN PUBLIC KEY-----
    MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAtminzTtNs5oqPCbg4uC1
    L7MfR3B+uyYvkSKr3NFieRCxp6VhrgodJJXYc3SqXbaTVBkTwU24wG4UvJCnoRQd
    0VhSawtLkN8XNAdCiD831dKUYMJPs43ZY/gO5CHVqUMdSHlp8dn7jNren59dvRRS
    3xC1D3etXuEU01XGuLi/5qJLAKqDbYs3bH1vslTjndg1WTsrkU8GEIT1NphSYg25
    s6rSLTIBfk8FjKquYHw3wYVSQK9rg2mqddJpRWkfZnazMHTmSNjOJpiNb77VLGSx
    9qDbbLjurCl2mAG5Z+w76uKfKGgOo68SU0TL1sPybsKhAoZZg1gF06mvMln5eq5C
    RQIDAQAB
    -----END PUBLIC KEY-----
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: pms-group-acl-kp
  namespace: nonrtric
plugin: acl
config:
  whitelist: ['pms-group']
---
apiVersion: v1
kind: Secret
metadata:
  name: pms-group-acl-sec
  namespace: nonrtric
type: Opaque
stringData:
  kongCredType: acl
  group: pms-group
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: ecs-group-acl-kp
  namespace: nonrtric
plugin: acl
config:
  whitelist: ['ecs-group']
---
apiVersion: v1
kind: Secret
metadata:
  name: ecs-group-acl-sec
  namespace: nonrtric
type: Opaque
stringData:
  kongCredType: acl
  group: ecs-group
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: all-group-acl-kp
  namespace: nonrtric
plugin: acl
config:
  whitelist: ['ecs-group', 'pms-group']
---
apiVersion: configuration.konghq.com/v1
kind: KongConsumer
metadata:
  name: pms-user-kc
  namespace: nonrtric
  annotations:
    kubernetes.io/ingress.class: kong
username: pms-user
credentials:
- pms-jwt-sec
- pms-group-acl-sec
---
apiVersion: configuration.konghq.com/v1
kind: KongConsumer
metadata:
  name: ecs-user-kc
  namespace: nonrtric
  annotations:
    kubernetes.io/ingress.class: kong
username: ecs-user
credentials:
- ecs-jwt-sec
- ecs-group-acl-sec
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: r1-pms-ing
  namespace: nonrtric
  annotations:
    konghq.com/plugins: app-jwt-kp,pms-group-acl-kp
    konghq.com/strip-path: "false"
spec:
  ingressClassName: kong
  rules:
  - http:
      paths:
      - path: /a1-policy
        pathType: ImplementationSpecific
        backend:
          service:
            name: policymanagementservice
            port:
              number: 8081
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: r1-ecs-ing
  namespace: nonrtric
  annotations:
    konghq.com/plugins: app-jwt-kp,ecs-group-acl-kp
    konghq.com/strip-path: "false"
spec:
  ingressClassName: kong
  rules:
  - http:
      paths:
      - path: /data-consumer
        pathType: ImplementationSpecific
        backend:
          service:
            name: enrichmentservice
            port:
              number: 8083
      - path: /data-producer
        pathType: ImplementationSpecific
        backend:
          service:
            name: enrichmentservice
            port:
              number: 8083
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: r1-echo-ing
  namespace: nonrtric
  annotations:
    konghq.com/plugins: app-jwt-kp,all-group-acl-kp
    konghq.com/strip-path: "true"
spec:
  ingressClassName: kong
  rules:
  - http:
      paths:
      - path: /echo
        pathType: ImplementationSpecific
        backend:
          service:
            name: httpecho
            port:
              number: 80
```

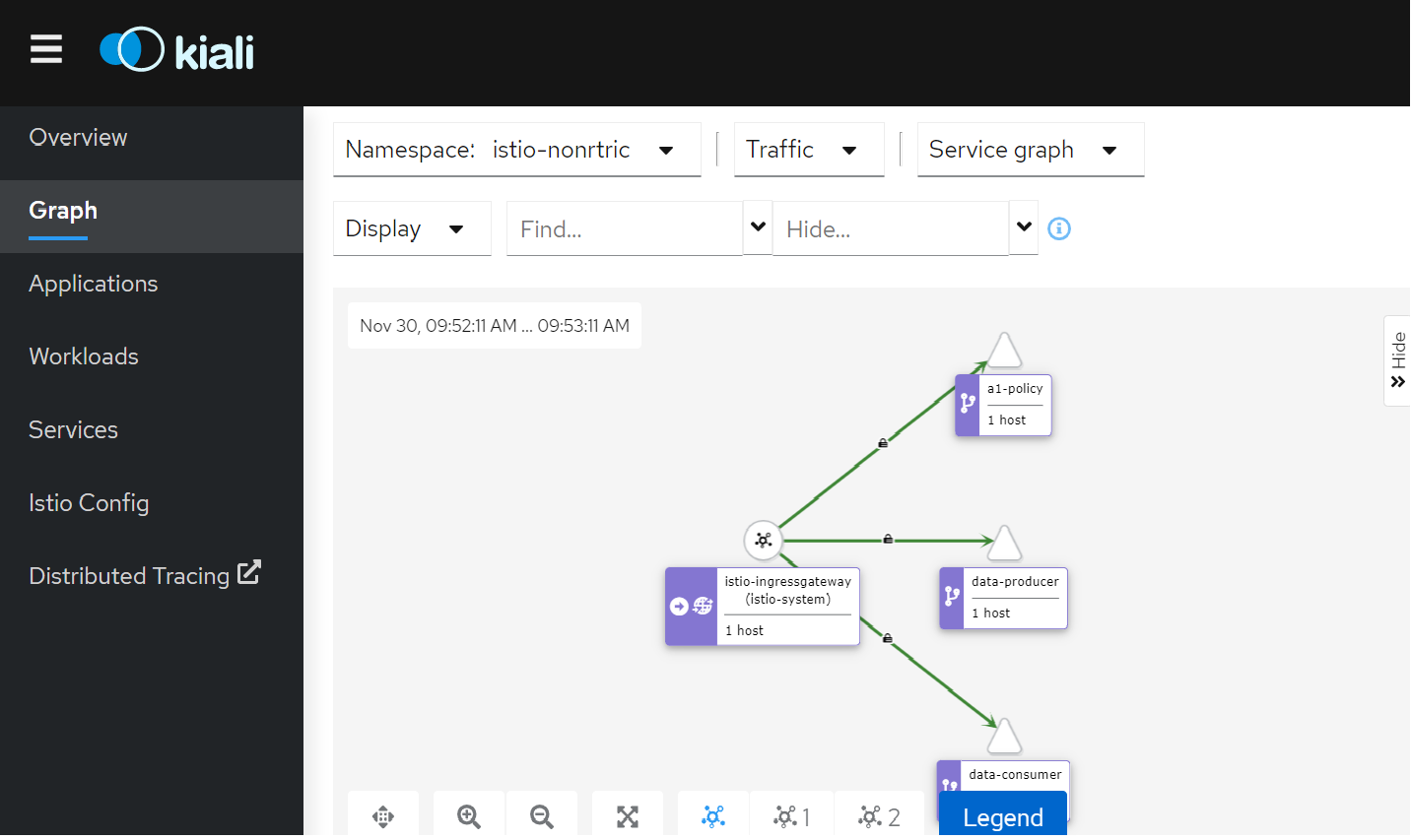

ISTIO Demo

1. Install Istio on minikube using the instructions here: Istio Installation - Simplified Learning (waytoeasylearn.com)
2. cd to the Istio directory and install the demo application:
   - kubectl create ns foo
   - kubectl apply -f <(istioctl kube-inject -f samples/httpbin/httpbin.yaml) -n foo
3. Create a Python script to generate a JWT using the code from here: https://medium.com/intelligentmachines/istio-jwt-step-by-step-guide-for-micro-services-authentication-690b170348fc . Install python_jwt using pip if it's not already installed.
4. Create jwt-example.yaml using the public key generated by the Python script, then apply it: kubectl create -f jwt-example.yaml

```yaml
apiVersion: "security.istio.io/v1beta1"
kind: "RequestAuthentication"
metadata:
  name: "jwt-example"
  namespace: istio-system
spec:
  selector:
    matchLabels:
      istio: ingressgateway
  jwtRules:
  - issuer: "ISSUER"
    jwks: |
      { "keys":[{"e":"AQAB","kty":"RSA","n":"x_yYl4uW5c6NHOA-bDDh0MThFggBWl-vYJr77b9F1LmAtTlJVM0rL5klTfv2DmlAmD9eZPrWeUOoOGhSpe58XiSAvxyeaOrZhtyUjT3aglrSys0YBsB19ItNGMuoIuzPpWOrdtKwHa9rPbrdc6q7vb93qu2UVaIz-3FJmGFtSA5t8FK_5bZKF-oOzRLwqeVQ3n0Bu_dFDuGeZjQWMZF32QupyA-GF-tDGGriPLy9sutlB1NQyZ4qiSZx5UMxcfLwsWfQxHemdwLeZXWKWNBov8RmbZy2Jz-dwg6XjHBWAjTnCGG9p-bp63nUlnELI3LcEGhGOugZBqcpNT5dEAQ0fQ"}]}
```

5. export the JWT token generated by the python script as an environment variable - export TOKEN="eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJhdWQiOiJBVURJRU5DRSIsImV4cCI6MTYzNzI1NDkxNSwiaWF0IjoxNjM3MjUxOTE1LCJpc3MiOiJJU1NVRVIiLCJqdGkiOiJCcmhDdEstcC00ZTF0RlBrZmpuSmhRIiwibmJmIjoxNjM3MjUxOTE1LCJwZXJtaXNzaW9uIjoicmVhZCIsInJvbGUiOiJ1c2VyIiwic3ViIjoiU1VCSkVDVCJ9.HrQCLPZXf0VkFe7JUVGXq-sHJQhVibqhToG4r63py-iwHWlUL02_WfoWRoxapgqGwImDdSlt1uG8RR-6VMqzWwGlcqBIRhFTG0nmzmtQjnOUs6QAKSUpA3PyWBIYHV0BwZbpo8Zq1Bo-sELy400fU-MCQ_054fSsG7JMBMmrnj8NyJmD2lNN0VSFGO53SPl2tQSVlc9OwAr8Uu0jfLPfUmh6yq43qFuxnVRfBGLLPNOt29aOfAetKLc72qlphtnbDx2a9teP5AIbkIWyIlhTytEnQRCwU4x8gDrEdkrHui4qCtzpl_uoITSwPe3AFsi7gQHB6rJoDj-j2zPc4rUTAA"

6. export INGRESS_HOST=$(minikube ip)

7. export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')

8. Test the service: curl --header "Authorization: Bearer $TOKEN" $INGRESS_HOST:$INGRESS_PORT/headers -s -o /dev/null -w "%{http_code}\n"

9. You should get a response code of 200

10. Update the token to something invalid

11. The response code will now be 401

Istio Service JWT Test

istio-test.yaml (uses the default namespace)

export TOKEN="eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJhdWQiOiJBVURJRU5DRSIsImV4cCI6MTYzODA4ODYzOSwiaWF0IjoxNjM3NjU2NjM5LCJpc3MiOiJJU1NVRVIiLCJqdGkiOiJXMW1ldEJISTlQWnJLZGZuTG54V0ZBIiwibmJmIjoxNjM3NjU2NjM5LCJwZXJtaXNzaW9uIjoicmVhZCIsInJvbGUiOiJ1c2VyIiwic3ViIjoiU1VCSkVDVCJ9.C8zVi4XpqaK-VVhDviCGO5SChNsUWe_WmP2Z-JXkM3VzMVQnc2w7ResUI-g8DxXQLXojc7BZiDA74VCRRzdSXtDrBbikd9riCN1D9UVXWaCdIv0gU9b23mOp2jUP7G8FgdKTjtcyx3pPmliHH1OnDhrsQUeTMezRurBa96sRf_9XF5B_SBXiTy65UhqzL-kKmbaTCXWO6F5d4mJ8gPJQ4BGQdl1CMfytg0RB1Tuyj72dDTetfWMStqRw0nEh76oAC5bDZAUhwpAUMbXTG0Iba9MSAhImha6grthU1_VY39LbmbZ7W7OfRV1mAI9PrDl0nwWWobvJ-iIg93luqvGrlA"

kubectl label namespace default istio-injection=enabled --overwrite

export INGRESS_HOST=$(minikube ip)

export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')

curl --header "Authorization: Bearer $TOKEN" $INGRESS_HOST:$INGRESS_PORT/data-producer

curl --header "Authorization: Bearer $TOKEN" $INGRESS_HOST:$INGRESS_PORT/a1-policy

curl --header "Authorization: Bearer $TOKEN" $INGRESS_HOST:$INGRESS_PORT/data-consumer

You can use different tokens for different deployments. Currently the same token is used for both jwt-ecs and jwt-pms, which match on the deployment labels nonrtric-ecs and nonrtric-pms.

You can also change the token issuer to one for your particular service(s), or change the expiry time; see the Python script in the link above.

You can move these objects to their own namespace if you prefer:

e.g.

kubectl create ns istio-nonrtric

namespace/istio-nonrtric created

kubectl label namespace istio-nonrtric istio-injection=enabled --overwrite

namespace/istio-nonrtric labeled

Then replace the default namespace in the YAML above with the new namespace name.

You can also update the AuthorizationPolicy to check the JWT issuer/subject, e.g.:

```yaml
rules:
- from:
  - source:
      requestPrincipals: ["ECSISSUER/SUBJECT"]
```

See the latest version here: istio-test-latest.yaml
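Istio identifies a validated token by its request principal: the iss and sub claims joined with a slash. requestPrincipals entries may be exact values or use a single leading or trailing `*` wildcard. The Go helpers below are an illustrative sketch of that matching, not Istio code.

```go
package main

import (
	"fmt"
	"strings"
)

// requestPrincipal builds the value compared against source.requestPrincipals.
func requestPrincipal(iss, sub string) string {
	return iss + "/" + sub
}

// principalMatches supports exact values, "prefix*", "*suffix" and the
// presence match "*" (any authenticated principal).
func principalMatches(pattern, principal string) bool {
	switch {
	case pattern == "*":
		return principal != ""
	case strings.HasSuffix(pattern, "*"):
		return strings.HasPrefix(principal, strings.TrimSuffix(pattern, "*"))
	case strings.HasPrefix(pattern, "*"):
		return strings.HasSuffix(principal, strings.TrimPrefix(pattern, "*"))
	default:
		return pattern == principal
	}
}

func main() {
	p := requestPrincipal("ECSISSUER", "SUBJECT")
	fmt.Println(p, principalMatches("ECSISSUER/SUBJECT", p)) // ECSISSUER/SUBJECT true
}
```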

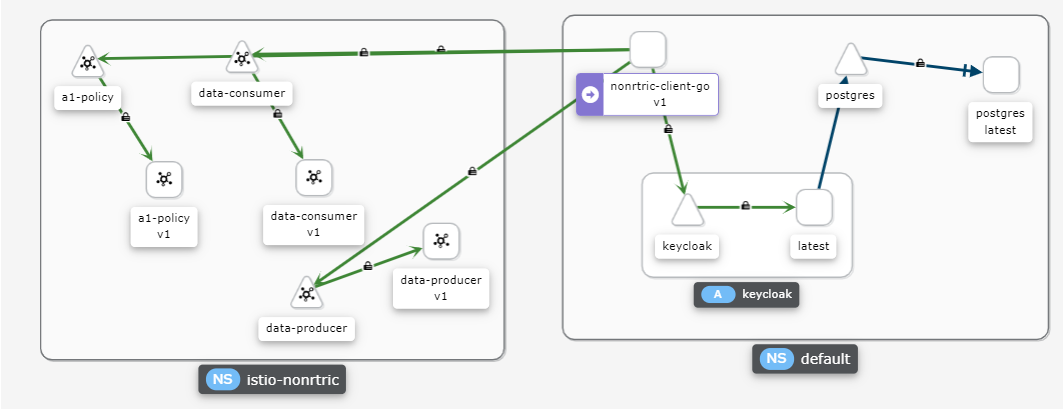

Istio with Keycloak

If you are using minikube on Ubuntu WSL, you need to run "minikube service keycloak" to open the Keycloak UI.

Run the following command to get the Keycloak URLs:

```shell
KEYCLOAK_URL=http://$(minikube ip):$(kubectl get services/keycloak -o go-template='{{(index .spec.ports 0).nodePort}}')/auth &&
echo "" &&
echo "Keycloak: $KEYCLOAK_URL" &&
echo "Keycloak Admin Console: $KEYCLOAK_URL/admin" &&
echo "Keycloak Account Console: $KEYCLOAK_URL/realms/myrealm/account" &&
echo ""
```

Retrieve the realm's public key from: http(s)://<hostname>/auth/realms/<realm name>
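The realm endpoint returns JSON whose public_key field holds the realm's signing key as base64-encoded DER, without PEM headers. The Go sketch below fetches it and wraps it as a PEM PUBLIC KEY block, for example to paste into a configuration that expects PEM. The URL is a placeholder built from the minikube address used elsewhere on this page. The field name assumes the legacy /auth/realms/<realm> response format.

```go
package main

import (
	"crypto/rsa"
	"crypto/x509"
	"encoding/base64"
	"encoding/json"
	"encoding/pem"
	"errors"
	"fmt"
	"io"
	"net/http"
)

// realmInfo holds the field of interest from GET /auth/realms/<realm>.
type realmInfo struct {
	PublicKey string `json:"public_key"` // base64 DER (SubjectPublicKeyInfo), no PEM armour
}

// parseRealmKey turns the realm's public_key into an RSA key and a PEM block.
func parseRealmKey(body []byte) (*rsa.PublicKey, []byte, error) {
	var info realmInfo
	if err := json.Unmarshal(body, &info); err != nil {
		return nil, nil, err
	}
	der, err := base64.StdEncoding.DecodeString(info.PublicKey)
	if err != nil {
		return nil, nil, err
	}
	pub, err := x509.ParsePKIXPublicKey(der)
	if err != nil {
		return nil, nil, err
	}
	rsaPub, ok := pub.(*rsa.PublicKey)
	if !ok {
		return nil, nil, errors.New("realm key is not an RSA key")
	}
	return rsaPub, pem.EncodeToMemory(&pem.Block{Type: "PUBLIC KEY", Bytes: der}), nil
}

func main() {
	// Placeholder address: minikube IP, Keycloak nodePort and realm name.
	resp, err := http.Get("http://192.168.49.2:31560/auth/realms/myrealm")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("read failed:", err)
		return
	}
	_, pemBytes, err := parseRealmKey(body)
	if err != nil {
		fmt.Println("parse failed:", err)
		return
	}
	fmt.Print(string(pemBytes))
}
```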

Enable keycloak with Istio

Set up a new realm, user and client as shown here: https://www.keycloak.org/getting-started/getting-started-kube

Note the ID of the new user; it will be used as the sub field in the token, e.g. 81b2051b-52d9-4e4e-88a6-00ca04b7b73d

The iss field is the URL of the realm, e.g. http://192.168.49.2:30869/auth/realms/myrealm

Edit the jwt-pms RequestAuthentication definition above: replace the issuer with the Keycloak iss, and replace the jwks field with a jwksUri pointing to your Keycloak certs:

```yaml
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: "jwt-pms"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-pms
  jwtRules:
  - issuer: "http://192.168.49.2:30869/auth/realms/myrealm"
    jwksUri: "http://192.168.49.2:30869/auth/realms/myrealm/protocol/openid-connect/certs"
```

Modify the AuthorizationPolicy named pms-policy, changing the issuer and subject in requestPrincipals to the Keycloak iss/sub:

```yaml
apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "pms-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-pms
  action: ALLOW
  rules:
  - from:
    - source:
        requestPrincipals: ["http://192.168.49.2:30869/auth/realms/myrealm/81b2051b-52d9-4e4e-88a6-00ca04b7b73d"]
```

Reapply the YAML file.

To generate a token, use the following command:

```shell
curl -X POST "$KEYCLOAK_URL/realms/myrealm/protocol/openid-connect/token" \
  -H "Content-Type: application/x-www-form-urlencoded" \
  -d "username=$USERNAME" \
  -d "password=$PASSWORD" \
  -d 'grant_type=password' \
  -d "client_id=$CLIENT_ID" | jq -r '.access_token'
```

e.g.

```shell
curl -X POST http://192.168.49.2:30869/auth/realms/myrealm/protocol/openid-connect/token \
  -H "Content-Type: application/x-www-form-urlencoded" \
  -d "username=user" \
  -d "password=secret" \
  -d 'grant_type=password' \
  -d "client_id=myclient" | jq -r '.access_token'
```

Note: you may need to install the jq utility on your system for this to work - sudo apt-get install jq

Test the a1-policy service with your new token:

```shell
TOKEN=$(curl -X POST http://192.168.49.2:30869/auth/realms/myrealm/protocol/openid-connect/token -H "Content-Type: application/x-www-form-urlencoded" -d username=user -d password=secret -d 'grant_type=password' -d client_id=myclient | jq -r '.access_token')
curl --header "Authorization: Bearer $TOKEN" $INGRESS_HOST:$INGRESS_PORT/a1-policy
```

Response: Hello a1-policy

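Note: The iss of the token will differ depending on how you retrieve it. If it is retrieved from within the cluster, the URL will start with http://keycloak.default:8080/; otherwise it will be something like http://192.168.49.2:31560/ (http://<minikube ip>:<keycloak service nodePort>). The issuer in the RequestAuthentication and the principal in the AuthorizationPolicy must match the iss of the tokens your clients actually use.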

Keycloak database

Keycloak uses the H2 database by default.

To configure Keycloak to use a different database, follow these steps.

- Install either postgres or mariadb using these YAML files: postgres.yaml or mariadb.yaml. These set up the keycloak database along with the username and password. You only need to change the directory used for persistent storage to an appropriate directory on your host.

Update the Keycloak installation script https://raw.githubusercontent.com/keycloak/keycloak-quickstarts/latest/kubernetes-examples/keycloak.yaml with the following environment (Keycloak environment):

```yaml
env:
- name: KEYCLOAK_USER
  value: "admin"
- name: KEYCLOAK_PASSWORD
  value: "admin"
- name: PROXY_ADDRESS_FORWARDING
  value: "true"
- name: DB_VENDOR
  value: "postgres"
- name: DB_ADDR
  value: "postgres"
- name: DB_PORT
  value: "5432"
- name: DB_DATABASE
  value: "keycloak"
- name: DB_USER
  value: "keycloak"
- name: DB_PASSWORD
  value: "keycloak"
```

You can also add the following code block to make sure Keycloak only starts once the database is up and running:

```yaml
initContainers:
- name: init-postgres
  image: busybox
  command: ['sh', '-c', 'until nc -vz postgres 5432; do echo waiting for postgres db; sleep 2; done;']
```

Note: you may also want to update the Keycloak service to specify a value for nodePort:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: keycloak
  labels:
    app: keycloak
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    nodePort: 31560
  selector:
    app: keycloak
  type: LoadBalancer
```

You can also make your deployment containers wait for Keycloak, for example:

```yaml
spec:
  initContainers:
  - name: init-keycloak
    image: busybox
    command: ['sh', '-c', 'until nc -vz keycloak.default 8080; do echo waiting for keycloak; sleep 2; done;']
  containers:
  - name: a1-policy
    image: hashicorp/http-echo
    ports:
    - containerPort: 5678
    args:
    - -text
    - "Hello a1-policy"
```

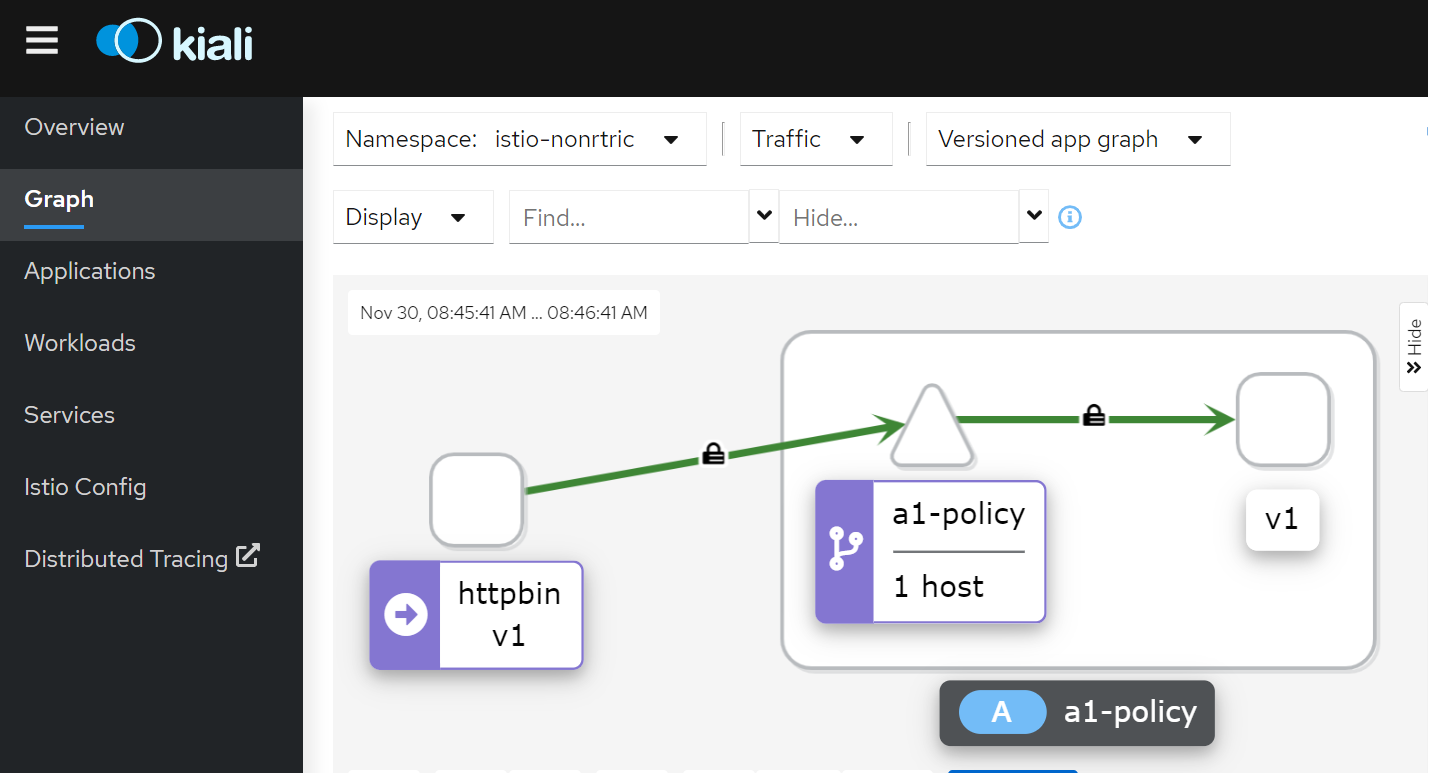

Istio mTLS

Test: Istio / Mutual TLS Migration

To see mTLS in Kiali, open the Display menu and select the Security check box.

Then run curl --header "Authorization: Bearer $TOKEN" a1-policy from the httpbin pod.

The padlock icon indicates that mTLS is being used for communication between the pods.

Policy to enforce mTLS between pods in the istio-nonrtric namespace in STRICT mode:

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: "default"
  namespace: istio-nonrtric
spec:
  mtls:
    mode: STRICT
```

Change the mode to PERMISSIVE to also accept plain-text traffic from pods without an Istio sidecar.

Change the namespace to istio-system (the root namespace) to apply mTLS to the entire mesh.

Istio cert manager

https://istio.io/latest/docs/ops/integrations/certmanager/

Go Http Request Handler for Testing

nonrtric-server-go

```go
package main

import (
	"encoding/json"
	"fmt"
	"io/ioutil"
	"log"
	"net/http"
	"strings"

	"github.com/gorilla/mux"
)

// requestHandler echoes the method, service prefix and parameters of each
// request, so gateway and AuthorizationPolicy behaviour can be observed.
func requestHandler(w http.ResponseWriter, r *http.Request) {
	// The responses are plain text lines, not JSON.
	w.Header().Set("Content-Type", "text/plain; charset=utf-8")
	params := mux.Vars(r)
	id := params["id"]
	data := params["data"]
	// First path segment, e.g. "a1-policy" for /a1-policy/1001.
	prefix := strings.Split(r.URL.Path, "/")[1]

	switch r.Method {
	case "GET":
		if id == "" {
			fmt.Println("Received get request for " + prefix + ", params: nil")
			fmt.Fprint(w, "Response to get request for "+prefix+", params: nil\n")
		} else {
			fmt.Println("Received get request for " + prefix + ", params: id=" + id)
			fmt.Fprint(w, "Response to get request for "+prefix+", params: id="+id+"\n")
		}
	case "POST":
		// POST carries id and data in a JSON body instead of the path.
		body, err := ioutil.ReadAll(r.Body)
		if err != nil {
			http.Error(w, "cannot read request body", http.StatusBadRequest)
			return
		}
		keyVal := make(map[string]string)
		if err := json.Unmarshal(body, &keyVal); err != nil {
			http.Error(w, "request body must be a JSON object of strings", http.StatusBadRequest)
			return
		}
		id, data := keyVal["id"], keyVal["data"]
		fmt.Println("Received post request for " + prefix + ", params: id=" + id + ", data=" + data)
		fmt.Fprint(w, "Response to post request for "+prefix+", params: id="+id+", data="+data+"\n")
	case "PUT":
		fmt.Println("Received put request for " + prefix + ", params: id=" + id + ", data=" + data)
		fmt.Fprint(w, "Response to put request for "+prefix+", params: id="+id+", data="+data+"\n")
	case "DELETE":
		fmt.Println("Received delete request for " + prefix + ", params: id=" + id)
		fmt.Fprint(w, "Response to delete request for "+prefix+", params: id="+id+"\n")
	default:
		fmt.Println("Received request for unsupported method, only GET, POST, PUT and DELETE methods are supported.")
		http.Error(w, "Error, only GET, POST, PUT and DELETE methods are supported.", http.StatusMethodNotAllowed)
	}
}

func main() {
	router := mux.NewRouter()
	// Each service prefix accepts /prefix, /prefix/{id} and /prefix/{id}/{data}.
	prefixes := []string{"/a1-policy", "/data-consumer", "/data-producer"}
	for _, prefix := range prefixes {
		router.HandleFunc(prefix, requestHandler)
		router.HandleFunc(prefix+"/{id}", requestHandler)
		router.HandleFunc(prefix+"/{id}/{data}", requestHandler)
	}
	log.Fatal(http.ListenAndServe(":8080", router))
}
```

```dockerfile
FROM golang:1.15.2-alpine3.12 as build
RUN apk add git
RUN mkdir /build
ADD . /build
WORKDIR /build
RUN go get github.com/gorilla/mux
RUN go build -o nonrtric-server-go .
FROM alpine:latest
RUN mkdir /app
WORKDIR /app/
# Copy the Pre-built binary file from the previous stage
COPY --from=build /build .
# Expose port 8080
EXPOSE 8080
# Run Executable
CMD ["/app/nonrtric-server-go"]
```

Testing

Update the AuthorizationPolicies to allow only certain operations:

```yaml
apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "pms-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-pms
  action: ALLOW
  rules:
  - from:
    - source:
        requestPrincipals: ["http://192.168.49.2:31560/auth/realms/pmsrealm/fab53fd0-3315-4e2f-bd17-6984fb7745f2"]
    to:
    - operation:
        methods: ["GET", "POST", "PUT"]
        paths: ["/a1-policy*"]
---
apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "ics-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-ics
  action: ALLOW
  rules:
  - from:
    - source:
        requestPrincipals: ["http://192.168.49.2:31560/auth/realms/icsrealm/ad83e4ea-c114-4549-be29-b3aaf92148a5"]
    to:
    - operation:
        methods: ["GET", "PUT", "DELETE"]
        paths: ["/data-*"]
```
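You can check the operation part of these two policies by hand against the test script that follows. The Go sketch below models only the to.operation conditions; the from principal check is assumed to pass. A method, if listed, must match, and a path ending in `*` is a prefix match. Types and names are illustrative, not Istio APIs.

```go
package main

import (
	"fmt"
	"strings"
)

// operation mirrors to.operation in the two policies.
type operation struct {
	Methods []string // empty means any method
	Paths   []string // exact, "prefix*", "*suffix" or "*"
}

func matchPath(pattern, path string) bool {
	switch {
	case pattern == "*":
		return true
	case strings.HasSuffix(pattern, "*"):
		return strings.HasPrefix(path, strings.TrimSuffix(pattern, "*"))
	case strings.HasPrefix(pattern, "*"):
		return strings.HasSuffix(path, strings.TrimPrefix(pattern, "*"))
	}
	return pattern == path
}

func contains(list []string, v string) bool {
	for _, x := range list {
		if x == v {
			return true
		}
	}
	return false
}

// allows reports whether method and path satisfy the operation.
func (o operation) allows(method, path string) bool {
	if len(o.Methods) > 0 && !contains(o.Methods, method) {
		return false
	}
	if len(o.Paths) == 0 {
		return true
	}
	for _, p := range o.Paths {
		if matchPath(p, path) {
			return true
		}
	}
	return false
}

var (
	pmsPolicy = operation{Methods: []string{"GET", "POST", "PUT"}, Paths: []string{"/a1-policy*"}}
	icsPolicy = operation{Methods: []string{"GET", "PUT", "DELETE"}, Paths: []string{"/data-*"}}
)

func main() {
	fmt.Println(pmsPolicy.allows("DELETE", "/a1-policy/1001"))       // false: "RBAC: access denied"
	fmt.Println(icsPolicy.allows("PUT", "/data-producer/2001/xyz")) // true
}
```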

Shell script to test AuthorizationPolicy

```bash
#!/bin/bash

INGRESS_HOST=$(minikube ip)
INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')
TESTS=0
PASSED=0
FAILED=0
TEST_TS=$(date +%F-%T)
TOKEN=""

# Fetch an access token from the realm that owns the service under test:
# pmsrealm for a1-policy, icsrealm for the data-consumer/data-producer services.
function get_token
{
    local prefix="${1}"
    url="http://192.168.49.2:31560/auth/realms"
    if [[ "$prefix" == a1-policy* ]]; then
        TOKEN=$(curl -s -X POST ${url}/pmsrealm/protocol/openid-connect/token -H \
            "Content-Type: application/x-www-form-urlencoded" -d username=pmsuser -d password=secret \
            -d 'grant_type=password' -d client_id=pmsclient | jq -r '.access_token')
    else
        TOKEN=$(curl -s -X POST ${url}/icsrealm/protocol/openid-connect/token -H \
            "Content-Type: application/x-www-form-urlencoded" -d username=icsuser -d password=secret \
            -d 'grant_type=password' -d client_id=icsclient | jq -r '.access_token')
    fi
}

# run_test <path> <method> <expected response> <JSON body or "">
function run_test
{
    local prefix="${1}" type="${2}" msg="${3}" data="${4}"
    TESTS=$((TESTS+1))
    echo "Test ${TESTS}: Testing /${prefix} ${type}"
    get_token "$prefix"
    url=$INGRESS_HOST:$INGRESS_PORT"/"$prefix
    if [ "$data" != "" ]; then
        result=$(curl -s -X "${type}" -H "Content-type: application/json" -H "Authorization: Bearer $TOKEN" -d "${data}" "$url")
    else
        result=$(curl -s -X "${type}" -H "Content-type: application/json" -H "Authorization: Bearer $TOKEN" "$url")
    fi
    echo "$result"
    if [ "$result" != "$msg" ]; then
        echo "FAIL"
        FAILED=$((FAILED+1))
    else
        echo "PASS"
        PASSED=$((PASSED+1))
    fi
    echo ""
}

run_test "a1-policy" "GET" "Received get request for a1-policy, params: nil" ""
run_test "a1-policy/1001" "GET" "Received get request for a1-policy, params: id=1001" ""
run_test "a1-policy/1002/xyz" "PUT" "Received put request for a1-policy, params: id=1002, data=xyz" ""
run_test "a1-policy/1001" "DELETE" "RBAC: access denied" ""
run_test "a1-policy" "POST" "Received post request for a1-policy, params: id=1003, data=abc" '{"id":"1003","data":"abc"}'
run_test "data-consumer" "GET" "Received get request for data-consumer, params: nil" ""
run_test "data-consumer/3001" "DELETE" "Received delete request for data-consumer, params: id=3001" ""
run_test "data-producer/2001/xyz" "PUT" "Received put request for data-producer, params: id=2001, data=xyz" ""
run_test "data-consumer" "POST" "RBAC: access denied" '{"id":"1004","data":"abc"}'
run_test "data-producer" "POST" "RBAC: access denied" '{"id":"1005","data":"abc"}'

echo
echo "-----------------------------------------------------------------------"
echo "Number of Tests: $TESTS, Tests Passed: $PASSED, Tests Failed: $FAILED"
echo "Date: $TEST_TS"
echo "-----------------------------------------------------------------------"
```

Results:

```text
./service_exposure_tests.sh
Test 1: Testing /a1-policy GET
Received get request for a1-policy, params: nil
PASS

Test 2: Testing /a1-policy/1001 GET
Received get request for a1-policy, params: id=1001
PASS

Test 3: Testing /a1-policy/1002/xyz PUT
Received put request for a1-policy, params: id=1002, data=xyz
PASS

Test 4: Testing /a1-policy/1001 DELETE
RBAC: access denied
PASS

Test 5: Testing /a1-policy POST
Received post request for a1-policy, params: id=1003, data=abc
PASS

Test 6: Testing /data-consumer GET
Received get request for data-consumer, params: nil
PASS

Test 7: Testing /data-consumer/3001 DELETE
Received delete request for data-consumer, params: id=3001
PASS

Test 8: Testing /data-producer/2001/xyz PUT
Received put request for data-producer, params: id=2001, data=xyz
PASS

Test 9: Testing /data-consumer POST
RBAC: access denied
PASS

Test 10: Testing /data-producer POST
RBAC: access denied
PASS


-----------------------------------------------------------------------
Number of Tests: 10, Tests Passed: 10, Tests Failed: 0
Date: 2021-12-06-14:48:33
-----------------------------------------------------------------------
```

Go Http Client for running inside cluster

nonrtric-client-go

package main

import (

"fmt"

"net/http"

"net/url"

"encoding/json"

"time"

"io/ioutil"

"math/rand"

"strings"

"bytes"

"strconv"

"flag"

)

type Jwttoken struct {

Access_token string

Expires_in int

Refresh_expires_in int

Refresh_token string

Token_type string

Not_before_policy int

Session_state string

Scope string

}

var gatewayHost string

var gatewayPort string

var keycloakHost string

var keycloakPort string

var useGateway string

var letters = []rune("abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ")

func randSeq(n int) string {

b := make([]rune, n)

for i := range b {

b[i] = letters[rand.Intn(len(letters))]

}

return string(b)

}

func getToken(user string, password string, clientId string, realmName string) string {

keycloakUrl := "http://"+keycloakHost+":"+keycloakPort+"/auth/realms/"+realmName+"/protocol/openid-connect/token"

resp, err := http.PostForm(keycloakUrl,

url.Values{"username": {user}, "password": {password}, "grant_type": {"password"}, "client_id": {clientId}})

if err != nil {

fmt.Println(err)

panic("Something wrong with the credentials or url ")

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

var jwt Jwttoken

json.Unmarshal([]byte(body), &jwt)

return jwt.Access_token;

}

// MakeRequest sends one request to a service and always reports a result on ch
func MakeRequest(client *http.Client, prefix string, method string, ch chan<- string) {
	var id = rand.Intn(1000)
	var data = randSeq(10)
	var service = strings.Split(prefix, "/")[1]
	var gatewayUrl = "http://" + gatewayHost + ":" + gatewayPort
	var token = ""
	var jsonValue []byte = []byte{}
	var restUrl string = ""

	if strings.ToUpper(useGateway) != "Y" {
		// Bypass the ingress gateway and call the service directly inside the cluster
		gatewayUrl = "http://" + service + ".istio-nonrtric:80"
	}

	if service == "a1-policy" {
		token = getToken("pmsuser", "secret", "pmsclient", "pmsrealm")
	} else {
		token = getToken("icsuser", "secret", "icsclient", "icsrealm")
	}

	if method == "POST" {
		values := map[string]string{"id": strconv.Itoa(id), "data": data}
		jsonValue, _ = json.Marshal(values)
		restUrl = gatewayUrl + prefix
	} else if method == "PUT" {
		restUrl = gatewayUrl + prefix + "/" + strconv.Itoa(id) + "/" + data
	} else {
		restUrl = gatewayUrl + prefix + "/" + strconv.Itoa(id)
	}

	req, err := http.NewRequest(method, restUrl, bytes.NewBuffer(jsonValue))
	if err != nil {
		// Report the error and return, so main() is not left waiting on ch
		ch <- fmt.Sprintf("Got error %s", err.Error())
		return
	}
	req.Header.Set("Content-type", "application/json")
	req.Header.Set("Authorization", "Bearer "+token)

	resp, err := client.Do(req)
	if err != nil {
		// resp is nil here, so it must not be dereferenced
		ch <- fmt.Sprintf("Got error %s", err.Error())
		return
	}
	defer resp.Body.Close()

	body, _ := ioutil.ReadAll(resp.Body)
	respString := string(body)
	if respString == "RBAC: access denied" {
		respString += " for " + service + " " + strings.ToLower(method) + " request\n"
	}
	ch <- respString
}

func main() {
	flag.StringVar(&gatewayHost, "gatewayHost", "192.168.49.2", "Gateway Host")
	flag.StringVar(&gatewayPort, "gatewayPort", "32162", "Gateway Port")
	flag.StringVar(&keycloakHost, "keycloakHost", "192.168.49.2", "Keycloak Host")
	flag.StringVar(&keycloakPort, "keycloakPort", "31560", "Keycloak Port")
	flag.StringVar(&useGateway, "useGateway", "Y", "Connect to services through API gateway")
	flag.Parse()

	client := &http.Client{
		Timeout: time.Second * 10,
	}
	ch := make(chan string)
	var prefixArray [3]string = [3]string{"/a1-policy", "/data-consumer", "/data-producer"}
	var methodArray [4]string = [4]string{"GET", "POST", "PUT", "DELETE"}

	for {
		// Send one request per service/method pair concurrently
		for _, prefix := range prefixArray {
			for _, method := range methodArray {
				go MakeRequest(client, prefix, method, ch)
			}
		}
		// Print every result before sleeping
		for i := 0; i < len(prefixArray); i++ {
			for j := 0; j < len(methodArray); j++ {
				fmt.Println(<-ch)
			}
		}
		time.Sleep(30 * time.Second)
	}
}

FROM golang:1.15.2-alpine3.12 as build
RUN apk add git
RUN mkdir /build
ADD . /build
WORKDIR /build
RUN go build -o nonrtric-client-go .

FROM alpine:latest
RUN mkdir /app
WORKDIR /app/
# Copy the build directory (including the pre-built binary) from the previous stage
COPY --from=build /build .
# Expose port 8080
EXPOSE 8080
# Run Executable
ENTRYPOINT [ "/app/nonrtric-client-go", \
  "-gatewayHost", "istio-ingressgateway.istio-system", \
  "-gatewayPort", "80", \
  "-keycloakHost", "keycloak.default", \
  "-keycloakPort", "8080", \
  "-useGateway", "N" ]

Update AuthorizationPolicy

apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "ics-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-ics
  action: ALLOW
  rules:
  - from:
    - source:
        namespaces: ["default"]
    to:
    - operation:
        methods: ["GET", "POST", "PUT", "DELETE"]
        paths: ["/data-*"]
        hosts: ["data-consumer*", "data-producer*"]
        ports: ["8080"]
---
apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "pms-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-pms
  action: ALLOW
  rules:
  - from:
    - source:
        principals: ["cluster.local/ns/default/sa/goclient"]
    to:
    - operation:
        methods: ["GET", "POST", "PUT", "DELETE"]
        paths: ["/a1-policy*"]
        hosts: ["a1-policy*"]
        ports: ["8080"]
    when:
    - key: request.auth.claims[preferred_username]
      values: ["pmsuser"]

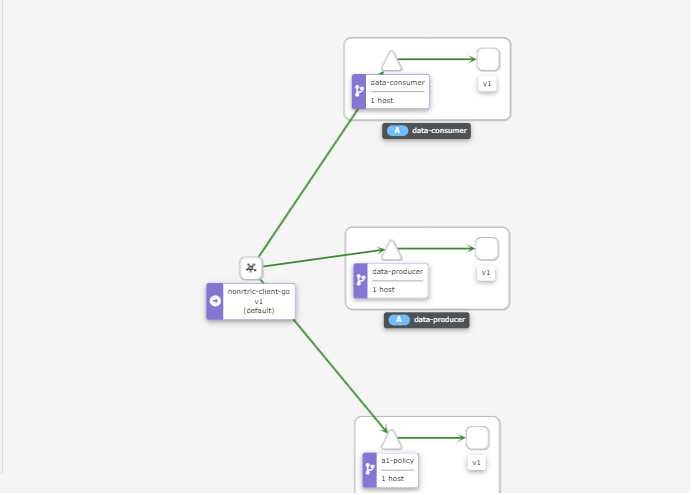

Requests are sent from the nonrtric-client-go pod directly to the services from within the cluster.

In the above example I'm using principals and namespaces for authorization.

Both of these require mTLS to be enabled.

If Istio sidecar injection is not enabled for the client's namespace, you can inject the Istio proxy into the individual pod: istioctl kube-inject -f client.yaml | kubectl apply -f -

I have also included a "when" condition which checks one of the JWT fields, in this case "preferred_username": "pmsuser".

The hosts and ports fields refer to the destination host(s) and port(s).
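The principals and namespaces fields are both matched against the client's mTLS identity, which is why they need mTLS. As a minimal sketch, assuming the usual Istio identity format <trust-domain>/ns/<namespace>/sa/<service-account> (with the default trust domain cluster.local), the Go snippet below builds the principal used in pms-policy and extracts the namespace part that a namespaces rule would compare. The helper names spiffePrincipal and namespaceOf are hypothetical and not part of any Istio API.

```go
package main

import (
	"fmt"
	"strings"
)

// spiffePrincipal builds a workload identity in the form Istio uses for
// source.principals: <trust-domain>/ns/<namespace>/sa/<service-account>.
func spiffePrincipal(trustDomain, namespace, serviceAccount string) string {
	return fmt.Sprintf("%s/ns/%s/sa/%s", trustDomain, namespace, serviceAccount)
}

// namespaceOf returns the namespace segment of a principal, i.e. the value a
// source.namespaces rule is compared against. ok is false for malformed input.
func namespaceOf(principal string) (string, bool) {
	parts := strings.Split(principal, "/")
	if len(parts) != 5 || parts[1] != "ns" || parts[3] != "sa" {
		return "", false
	}
	return parts[2], true
}

func main() {
	p := spiffePrincipal("cluster.local", "default", "goclient")
	ns, _ := namespaceOf(p)
	fmt.Println(p)
	fmt.Println(ns)
}
```

Without mTLS the server-side proxy has no verified client certificate, so neither value is available and such rules cannot match.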

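To use JWT inside the cluster, you need to update the RequestAuthentication policy to include the internal address in the issuer and jwksUri fields. The issuer must match the token's iss claim exactly, so tokens obtained through the external address and tokens obtained inside the cluster each need their own entry: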

jwtRules:
- issuer: "http://192.168.49.2:31560/auth/realms/pmsrealm"
  jwksUri: "http://192.168.49.2:31560/auth/realms/pmsrealm/protocol/openid-connect/certs"
- issuer: "http://keycloak.default:8080/auth/realms/pmsrealm"
  jwksUri: "http://keycloak.default:8080/auth/realms/pmsrealm/protocol/openid-connect/certs"

You also need to update the AuthorizationPolicy to include the internal source (a requestPrincipal has the form <issuer>/<subject>):

rules:
- from:
  - source:
      requestPrincipals: ["http://192.168.49.2:31560/auth/realms/pmsrealm/fab53fd0-3315-4e2f-bd17-6984fb7745f2"]
  - source:
      requestPrincipals: ["http://keycloak.default:8080/auth/realms/pmsrealm/fab53fd0-3315-4e2f-bd17-6984fb7745f2"]
  to:
  - operation:
      methods: ["GET", "POST", "PUT", "DELETE"]
      paths: ["/a1-policy*"]

You can change the contents (fields) of your JWT token by using client mappers.

Create a new role, pms_admin.

Then assign the role to your user: Role Mappings (tab) → Available Roles → Add selected.

Then, in your client, select Mappers → Create → Mapper Type: User Realm Role, Token Claim Name: role.

The following field will be added to your JWT:

"role": [
  "pms_admin"
],

You can then use this in your when clause to allow or block access to certain endpoints:

- key: request.auth.claims[role]
  values: ["pms_admin"]

Create another role, pms_viewer, and assign it to a second user, pmsuser2.

We can then configure the AuthorizationPolicy to grant different access to different roles:

apiVersion: "security.istio.io/v1beta1"
kind: "AuthorizationPolicy"
metadata:
  name: "pms-policy"
  namespace: istio-nonrtric
spec:
  selector:
    matchLabels:
      apptype: nonrtric-pms
  action: ALLOW
  rules:
  - from:
    - source:
        requestPrincipals: ["http://192.168.49.2:31560/auth/realms/pmsrealm/fab53fd0-3315-4e2f-bd17-6984fb7745f2"]
    - source:
        requestPrincipals: ["http://keycloak.default:8080/auth/realms/pmsrealm/fab53fd0-3315-4e2f-bd17-6984fb7745f2"]
    to:
    - operation:
        methods: ["GET", "POST", "PUT", "DELETE"]
        paths: ["/a1-policy*"]
    when:
    - key: request.auth.claims[role]
      values: ["pms_admin"]
  - from:
    - source:
        requestPrincipals: ["http://192.168.49.2:31560/auth/realms/pmsrealm/f96255ec-d553-4c2e-b106-0ed586ccab70"]
    - source:
        requestPrincipals: ["http://keycloak.default:8080/auth/realms/pmsrealm/f96255ec-d553-4c2e-b106-0ed586ccab70"]
    to:
    - operation:
        methods: ["GET"]
        paths: ["/a1-policy*"]
    when:
    - key: request.auth.claims[role]
      values: ["pms_viewer"]

pms_admin role:

Test 1: Testing GET /a1-policy

Received get request for a1-policy, params: nil

Test 2: Testing GET /a1-policy/1001

Received get request for a1-policy, params: id=1001

Test 3: Testing PUT /a1-policy/1002/xyz

Received put request for a1-policy, params: id=1002, data=xyz

Test 4: Testing DELETE /a1-policy/1001

Received delete request for a1-policy, params: id=1001

Test 5: Testing POST /a1-policy

Received post request for a1-policy, params: id=1003, data=abc

pms_viewer role:

Test 1: Testing GET /a1-policy

Received get request for a1-policy, params: nil

Test 2: Testing GET /a1-policy/1001

Received get request for a1-policy, params: id=1001

Test 3: Testing PUT /a1-policy/1002/xyz

RBAC: access denied

Test 4: Testing DELETE /a1-policy/1001

RBAC: access denied

Test 5: Testing POST /a1-policy

RBAC: access denied

You can also leave out the from clause and just use to and when in the rules:

rules:
- to:
  - operation:
      methods: ["GET", "POST", "PUT", "DELETE"]
      paths: ["/a1-policy*"]
  when:
  - key: request.auth.claims[role]
    values: ["pms_admin"]
- to:
  - operation:
      methods: ["GET"]
      paths: ["/a1-policy*"]
  when:
  - key: request.auth.claims[role]
    values: ["pms_viewer"]
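To make the ALLOW semantics of these rules concrete, here is a simplified model in Go. It is a sketch, not Istio's implementation: it assumes only to.operation methods/paths and a single when condition on the role claim, ignores from, hosts, ports and DENY policies, and uses hypothetical names (rule, allowed, matchPath). A request is allowed if any rule matches; a list-valued claim such as role matches if any element is listed. It reproduces the pms_viewer results in the test output above (GET allowed, PUT denied).

```go
package main

import (
	"fmt"
	"strings"
)

// rule models one AuthorizationPolicy rule: to.operation plus a role condition.
type rule struct {
	methods []string
	paths   []string // exact, prefix ("/a1-policy*") or suffix ("*/info") patterns
	roles   []string // allowed values for request.auth.claims[role]
}

// matchPath applies the exact/prefix/suffix matching used in paths.
func matchPath(pattern, path string) bool {
	switch {
	case pattern == "*":
		return true
	case strings.HasSuffix(pattern, "*"):
		return strings.HasPrefix(path, strings.TrimSuffix(pattern, "*"))
	case strings.HasPrefix(pattern, "*"):
		return strings.HasSuffix(path, strings.TrimPrefix(pattern, "*"))
	default:
		return pattern == path
	}
}

func contains(list []string, v string) bool {
	for _, s := range list {
		if s == v {
			return true
		}
	}
	return false
}

// allowed returns true if any rule matches the method, path and role claim.
func allowed(rules []rule, method, path string, roleClaim []string) bool {
	for _, r := range rules {
		if !contains(r.methods, method) {
			continue
		}
		pathOK := false
		for _, p := range r.paths {
			if matchPath(p, path) {
				pathOK = true
				break
			}
		}
		if !pathOK {
			continue
		}
		for _, role := range roleClaim {
			if contains(r.roles, role) {
				return true
			}
		}
	}
	return false // no rule matched: the request gets "RBAC: access denied"
}

func main() {
	rules := []rule{
		{methods: []string{"GET", "POST", "PUT", "DELETE"}, paths: []string{"/a1-policy*"}, roles: []string{"pms_admin"}},
		{methods: []string{"GET"}, paths: []string{"/a1-policy*"}, roles: []string{"pms_viewer"}},
	}
	viewer := []string{"pms_viewer"}
	fmt.Println(allowed(rules, "GET", "/a1-policy/1001", viewer))
	fmt.Println(allowed(rules, "PUT", "/a1-policy/1002/xyz", viewer))
}
```

Because no from clause is modelled, any caller presenting a token with the right role claim is allowed, which is exactly what leaving out from means in the policy above.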

Istio policy is enforced at the pod level (in the Envoy proxy), in user space (layer 7), as opposed to Kubernetes network policy, which is enforced in kernel space on the host (layer 4). By operating at the application layer, Istio has a richer set of attributes to express and enforce policy in the protocols it understands (e.g. HTTP headers).

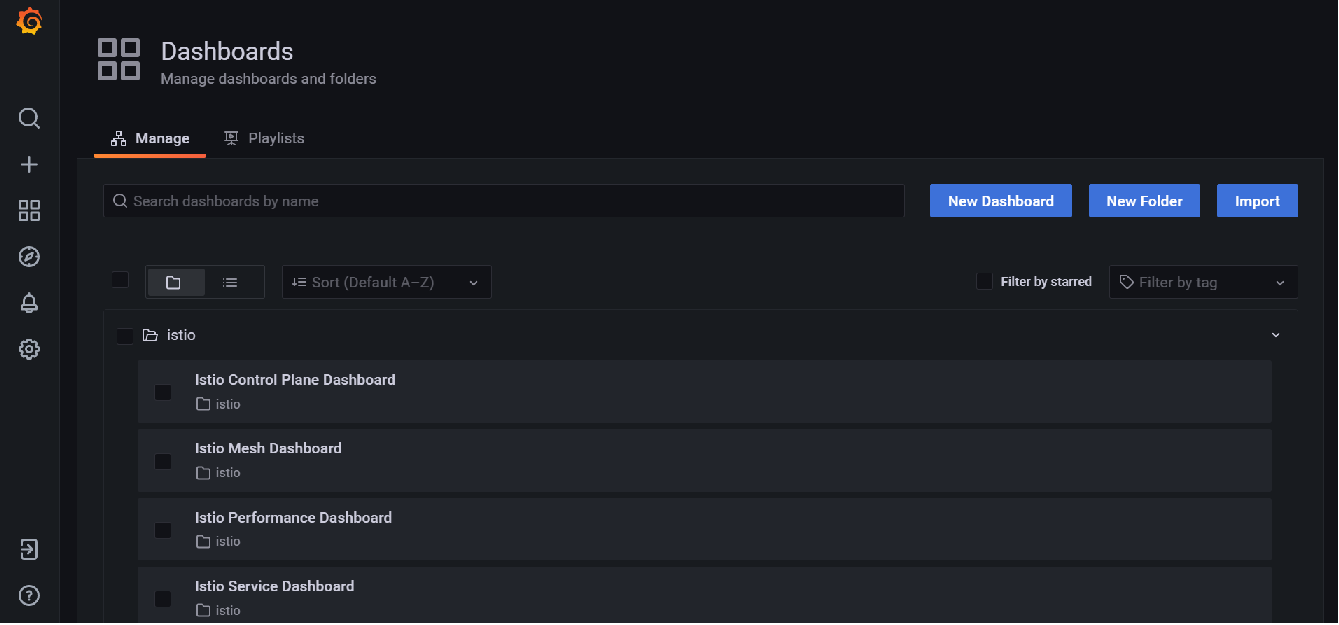

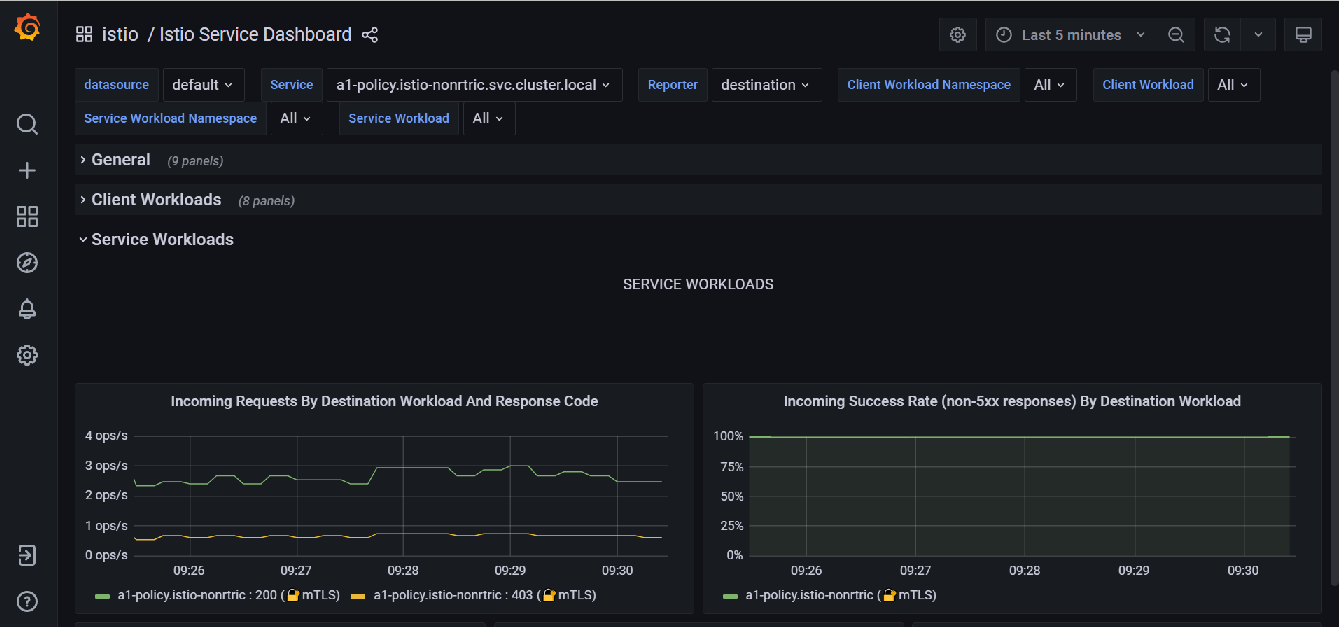

Grafana

Istio also comes with Grafana; to open it, run: istioctl dashboard grafana

This will bring up the Grafana home page.

From the side menu, select Dashboards → Manage.

The Istio dashboards are installed by default.

Select the Istio Service Dashboard → Service Workloads to see the incoming requests.

OAuth2 Proxy

OAuth2 Proxy documentation (oauth2-proxy.github.io)

Calico network policy

https://docs.projectcalico.org/security/calico-network-policy

Calico can be used with Istio to enforce network policies: Enforce Calico network policy using Istio

For example, we can limit connections to the Keycloak database to only pods using the keycloak service account:

apiVersion: projectcalico.org/v3
kind: GlobalNetworkPolicy
metadata:
  name: postgres
spec:
  selector: app == 'postgres'
  ingress:
  - action: Allow
    source:
      serviceAccounts:
        names: ["keycloak"]
  egress:
  - action: Allow

Following the example in the link above, I installed the test application in a separate namespace (calico-test). Using curl, I was able to access the database before applying the GlobalNetworkPolicy. After applying the policy, the request timed out rather than returning a 403 Forbidden message, because Calico drops the packets at the network level, so no HTTP response is ever sent.

Logging

We can use Elasticsearch, Kibana and Fluentd to aggregate and visualize the Kubernetes logs.

You can use the following files to set up a single-node ELK stack on minikube:

- elastic.yaml includes a persistent volume that mounts the /usr/share/elasticsearch/data directory to a host path. This prevents loss of data when the pod is restarted. (You may need to change the hostPath path value to a directory on your own host.)

- Both elastic.yaml and kibana.yaml contain a config map for configuring the component on start up.

- xpack.security.enabled is set to true to enable security.

- This is a single-node minikube setup; you may want to alter this for your own installation.

Make sure to create the logging namespace before applying these files.

Once Elasticsearch is up and running, log into Kibana and create a new index pattern for logstash-*.

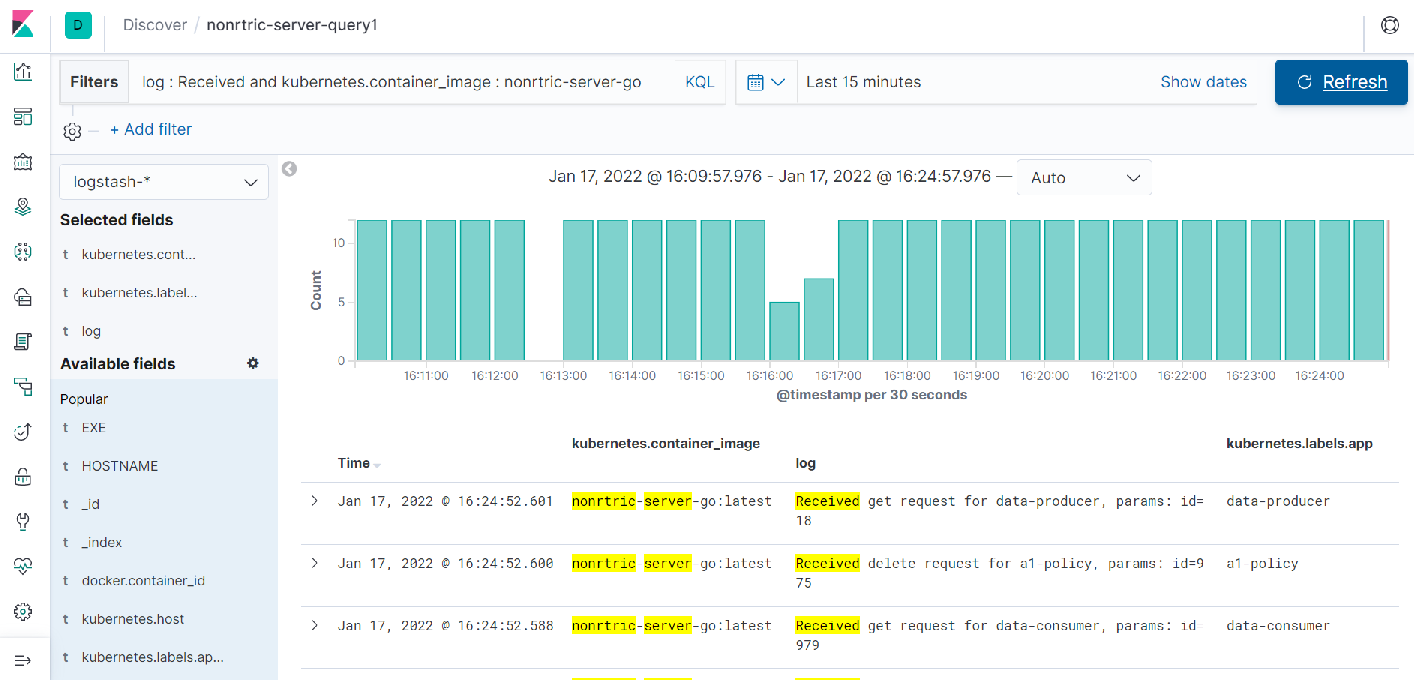

Once this is done use the discover tab to create a query against your logs:

Select the timeStamp, kubernetes.container_image, log and kubernetes.label.app fields.

Use the filter input box to show only the logs you want, e.g. log : Received and kubernetes.container_image : nonrtric-server-go

Change the date field to the last 15 minutes.

Save your query.

This will produce a report like the following, showing the number of requests made to nonrtric-server-go:

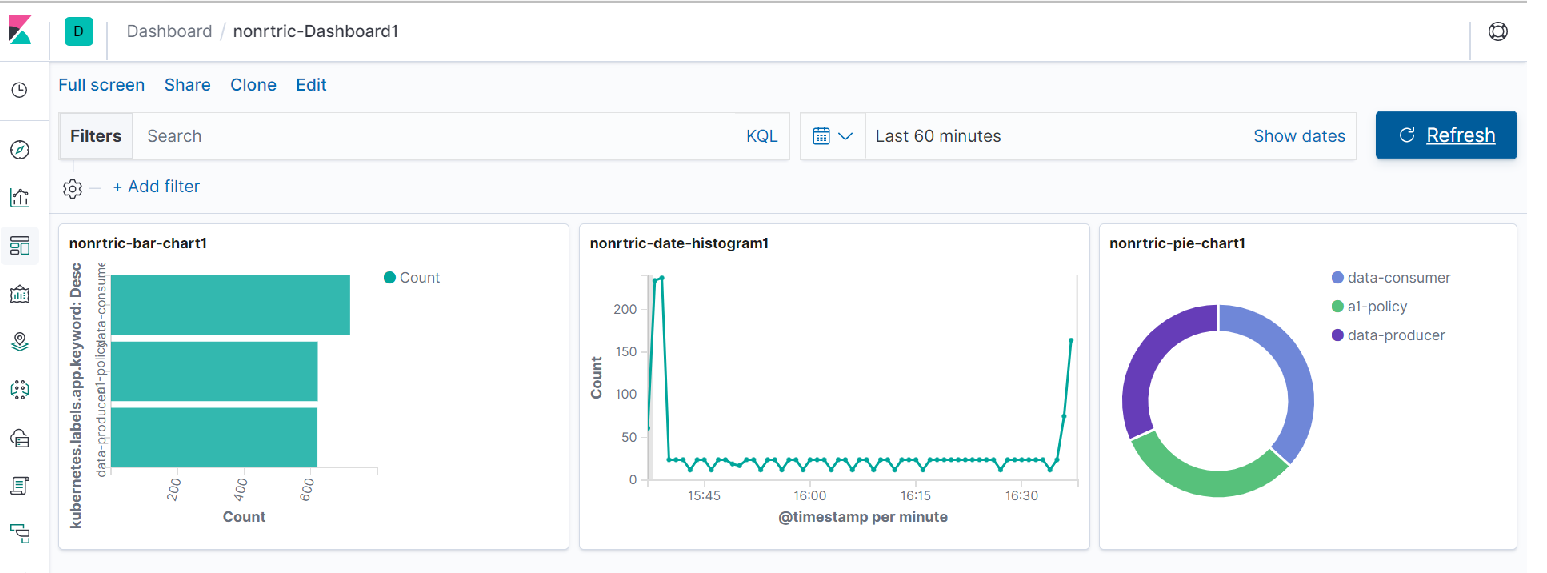

Go to the visualize tab.

Here you can create different charts to display your data and then add them to a dashboard.

Here you can see 3 graphs: the first one shows the number of requests received by each NONRTRIC component in the last 60 minutes.

The second one is a histogram showing the total number of requests broken down by timeStamp.

The last one is a pie chart showing the distribution of requests across components.
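The bar and pie charts are aggregations of the log field per component. As a minimal sketch of the same calculation, assuming the server logs the same text it returns to the client (e.g. "Received get request for a1-policy, params: nil", as in the test output above), the hypothetical countByComponent function below tallies requests per component and prints each component's share. The data-consumer line in main is an assumed example; only a1-policy lines appear in this page.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// countByComponent tallies lines of the form
// "Received <method> request for <component>, params: ..." per component.
// Lines that do not follow this pattern are ignored.
func countByComponent(lines []string) map[string]int {
	const marker = " request for "
	counts := make(map[string]int)
	for _, line := range lines {
		if !strings.HasPrefix(line, "Received ") {
			continue
		}
		i := strings.Index(line, marker)
		if i < 0 {
			continue
		}
		component := line[i+len(marker):]
		if j := strings.Index(component, ","); j >= 0 {
			component = component[:j]
		}
		counts[component]++
	}
	return counts
}

func main() {
	lines := []string{
		"Received get request for a1-policy, params: nil",
		"Received put request for a1-policy, params: id=1002, data=xyz",
		"Received get request for data-consumer, params: id=1001",
		"RBAC: access denied",
	}
	counts := countByComponent(lines)
	total := 0
	names := make([]string, 0, len(counts))
	for name, n := range counts {
		total += n
		names = append(names, name)
	}
	sort.Strings(names)
	for _, name := range names {
		// Share of all requests, as the pie chart would show it
		fmt.Printf("%s: %d (%.0f%%)\n", name, counts[name], 100*float64(counts[name])/float64(total))
	}
}
```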

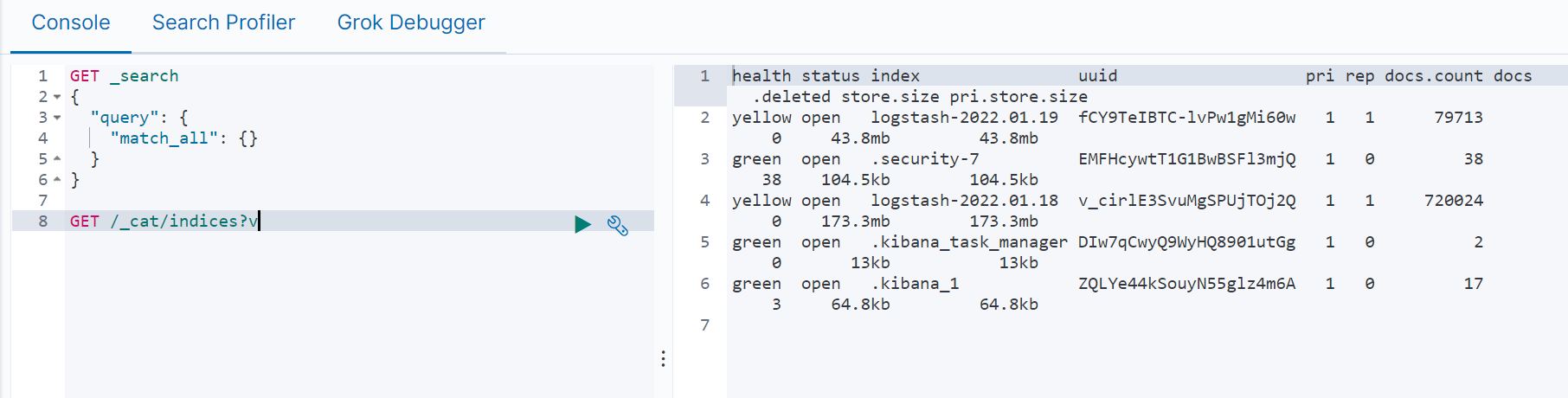

Click the Dev Tools tab to use the Elasticsearch console.

Run GET /_cat/indices?v to see the list of indices currently in use

You can delete indices that are no longer required by running the following command:

DELETE /<index name> e.g. DELETE /logstash-2022.01.18

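You can create a lifecycle (ILM) policy to remove logstash indices older than 1 day, attach it to the existing logstash-* indices, and add it to an index template so that new indices pick it up: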

PUT /_ilm/policy/cleanup_policy
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {}
      },
      "delete": {
        "min_age": "1d",
        "actions": { "delete": {} }
      }
    }
  }
}

PUT /logstash-*/_settings
{ "index.lifecycle.name": "cleanup_policy" }

PUT /_template/logging_policy_template
{
  "index_patterns": ["logstash-*"],
  "settings": { "index.lifecycle.name": "cleanup_policy" }
}

Quick Installation Guide

- Download and install Istio: istioctl install --set profile=demo

- cd to the samples/addons/ directory and install the dashboards, e.g. kubectl create -f kiali.yaml

- Install postgres: istioctl kube-inject -f postgres.yaml | kubectl apply -f - (change the hostPath path value to a path on your host)

- Install keycloak: istioctl kube-inject -f keycloak.yaml | kubectl apply -f -

- Open the Keycloak admin console and set up the required realms, users and clients.

- Set up the "pms_admin" and "pms_viewer" roles for pmsuser and pmsuser2 respectively.

- Build the server image (see Release E: Coordinated Service Exposure): docker build -t nonrtric-server-go:latest .

- Create the istio-nonrtric namespace: kubectl create namespace istio-nonrtric

- Enable Istio for the istio-nonrtric namespace: kubectl label namespace istio-nonrtric istio-injection=enabled

- Edit istio-test.yaml so the host IP specified matches yours.

- Also change the user ID in the requestPrincipals field to match yours.

- Install istio-test.yaml: kubectl create -f istio-test.yaml

- Build the client image (see Release E: Coordinated Service Exposure): docker build -t nonrtric-client-go:latest .

- Install the test client: istioctl kube-inject -f client.yaml | kubectl apply -f -

- Open the Kiali dashboard to check your services are up and running.

- Open Grafana to view the Istio dashboards.

- Optionally install Release E: Coordinated Service Exposure

ONAP

ONAP Next Generation Security & Logging Architecture